Research with NVIDIA FLARE

Learn how NVIDIA FLARE facilitates research, from simulation tools to real-world case studies.

Research Tools

NVIDIA FLARE supports federated learning research with simulation tools and built-in support for experimentation. Many researchers need to simulate federated learning scenarios without setting up an actual federated learning system. NVIDIA FLARE lets them rerun experiments with different parameters, so results can be evaluated and monitored quickly.

NVIDIA FLARE Simulation Tools

Simulator: The Simulator is a multi-threaded, multi-process simulation tool that offers both a Command Line Interface (CLI) and a Python API. It can simulate different numbers of clients and run various federated learning jobs. Once a simulation is complete, users can deploy the same code in production without any changes. Users can also step through the code with an Integrated Development Environment (IDE) debugger.
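As a rough illustration of repeated experimentation with the Simulator CLI, the sketch below builds one `nvflare simulator` command per client count. It assumes the CLI's `-w` (workspace), `-n` (number of clients), and `-t` (threads) options; the job folder `jobs/my_job`, the workspace root, and both helper functions are hypothetical names chosen for this example. The commands are only printed, so the sketch runs without NVIDIA FLARE installed.

```python
import shlex


def build_simulator_command(job_folder, workspace, n_clients, threads):
    """Return the argument list for a single `nvflare simulator` run."""
    return [
        "nvflare", "simulator", job_folder,
        "-w", workspace,        # where the run writes its output
        "-n", str(n_clients),   # number of simulated clients
        "-t", str(threads),     # number of threads used to run them
    ]


def sweep_commands(job_folder, workspace_root, client_counts):
    """Build one command per client count, each with its own workspace."""
    commands = []
    for n in client_counts:
        workspace = f"{workspace_root}/clients_{n}"
        # Assumption for this sketch: one thread per simulated client.
        commands.append(build_simulator_command(job_folder, workspace, n, n))
    return commands


if __name__ == "__main__":
    # "jobs/my_job" and "/tmp/nvflare/sweep" are placeholder paths.
    for cmd in sweep_commands("jobs/my_job", "/tmp/nvflare/sweep", [2, 4, 8]):
        print(shlex.join(cmd))
        # To actually launch a run: subprocess.run(cmd, check=True)
```

Giving each run its own workspace keeps the outputs of different configurations from overwriting one another.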

Proof of Concept (POC) Mode: POC mode simulates real-world deployment on a local host. Clients and servers can be deployed in different directories and launched using separate terminals, each representing a different client or server startup. This mode allows for job submissions to the server as would occur in a real production environment.

Interaction Methods with FL Server

Admin Console: Issue interactive commands such as submitting jobs, listing jobs, and aborting jobs.

Job CLI: Submit jobs from the command line.

FLARE API: Allows submission and listing of jobs through Python code.

Experiment Tracking Tools Integration

Experiment tracking: FLARE supports logging metrics through its own metrics tracking log writers. Users can choose TensorBoard, MLflow, or Weights & Biases (W&B) syntax. Metrics can be streamed to either the FL server or the FL client, and switching to a different tracking system does not require any code changes.

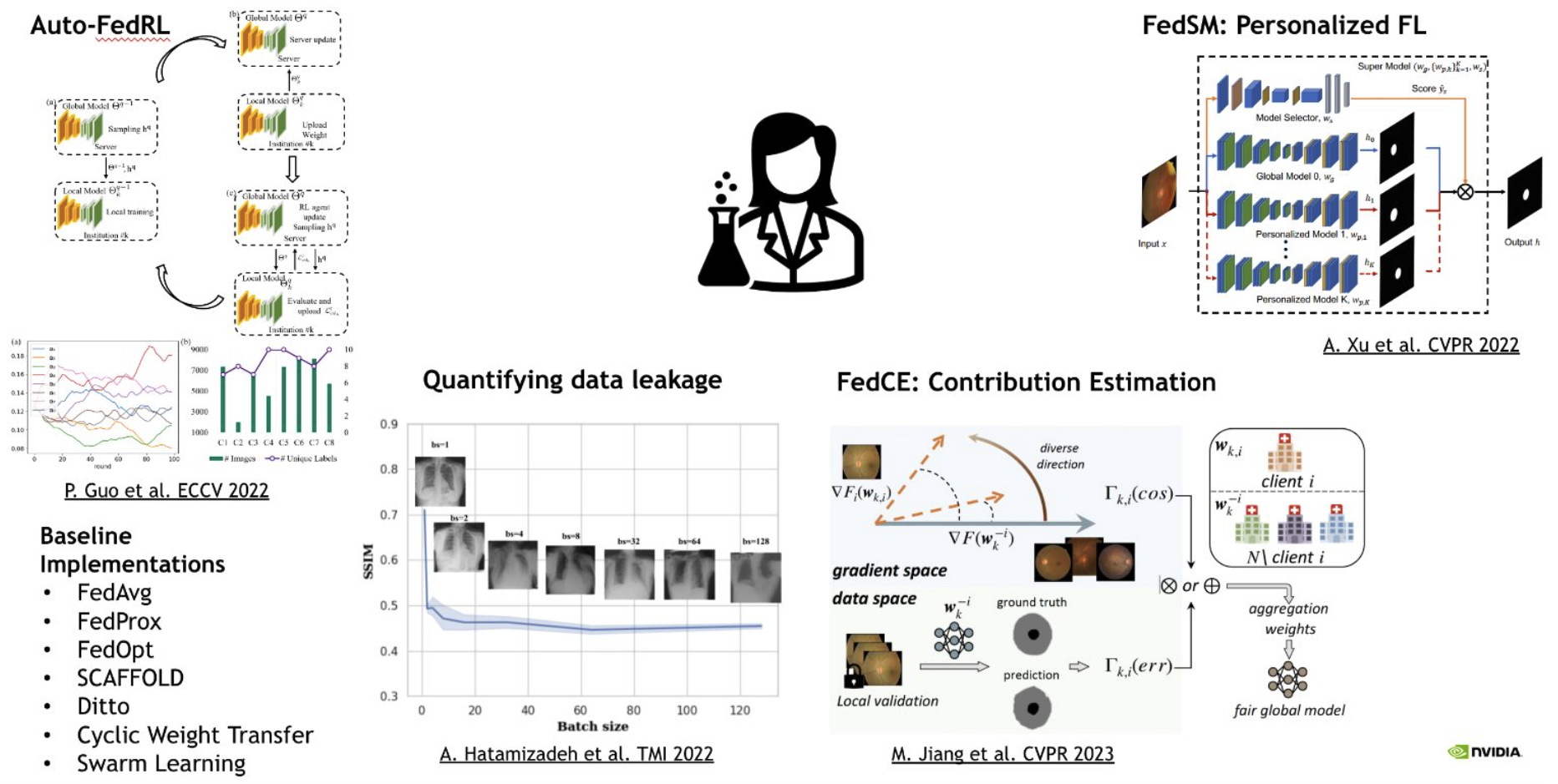
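One way to see why the logging syntax can be independent of the tracking system is the conceptual sketch below. It is not FLARE's implementation or API: every class here is hypothetical and written for this example. Two writers expose TensorBoard-style (`add_scalar`) and MLflow-style (`log_metric`) method signatures, and both emit the same neutral event, so the receiver that stores or forwards the metrics can be swapped without touching training code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MetricEvent:
    """Neutral record that every writer in this sketch produces."""
    key: str
    value: float
    step: int


class TensorBoardStyleWriter:
    """Hypothetical writer using TensorBoard's add_scalar call shape."""

    def __init__(self, sink):
        self._sink = sink

    def add_scalar(self, tag, scalar, global_step):
        self._sink(MetricEvent(tag, float(scalar), int(global_step)))


class MLflowStyleWriter:
    """Hypothetical writer using MLflow's log_metric call shape."""

    def __init__(self, sink):
        self._sink = sink

    def log_metric(self, key, value, step):
        self._sink(MetricEvent(key, float(value), int(step)))


class InMemoryReceiver:
    """Stand-in for a tracking backend; it only collects events."""

    def __init__(self):
        self.events = []

    def __call__(self, event):
        self.events.append(event)


receiver = InMemoryReceiver()
TensorBoardStyleWriter(receiver).add_scalar("train_loss", 0.42, 1)
MLflowStyleWriter(receiver).log_metric("train_loss", 0.37, 2)
print(receiver.events)
```

Both calls end up as the same kind of record in the receiver, which is the property that lets the backend change while the logging calls stay the same.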

Research Works

NVIDIA FLARE includes a large body of state-of-the-art research work; here is a quick view of recent work. Learn more in the research directory in our GitHub repository and view our list of publications.

Case Studies

NVIDIA has worked with several institutions to test and validate the utility of federated learning. We hope these case studies inspire, or relate to, your own research.

Here are five real-world implementations in healthcare that push the envelope for training robust, generalizable AI models:

- EXAM AI Model for Predicting Oxygen Requirements in COVID Patients

- ADOPS (ACR DASA OSU Partners HealthCare Stanford)

- University of Minnesota and Fairview X-Ray COVID AI Model

- SUN Initiative Prostate Cancer AI Model

- CT Pancreas Segmentation AI Model

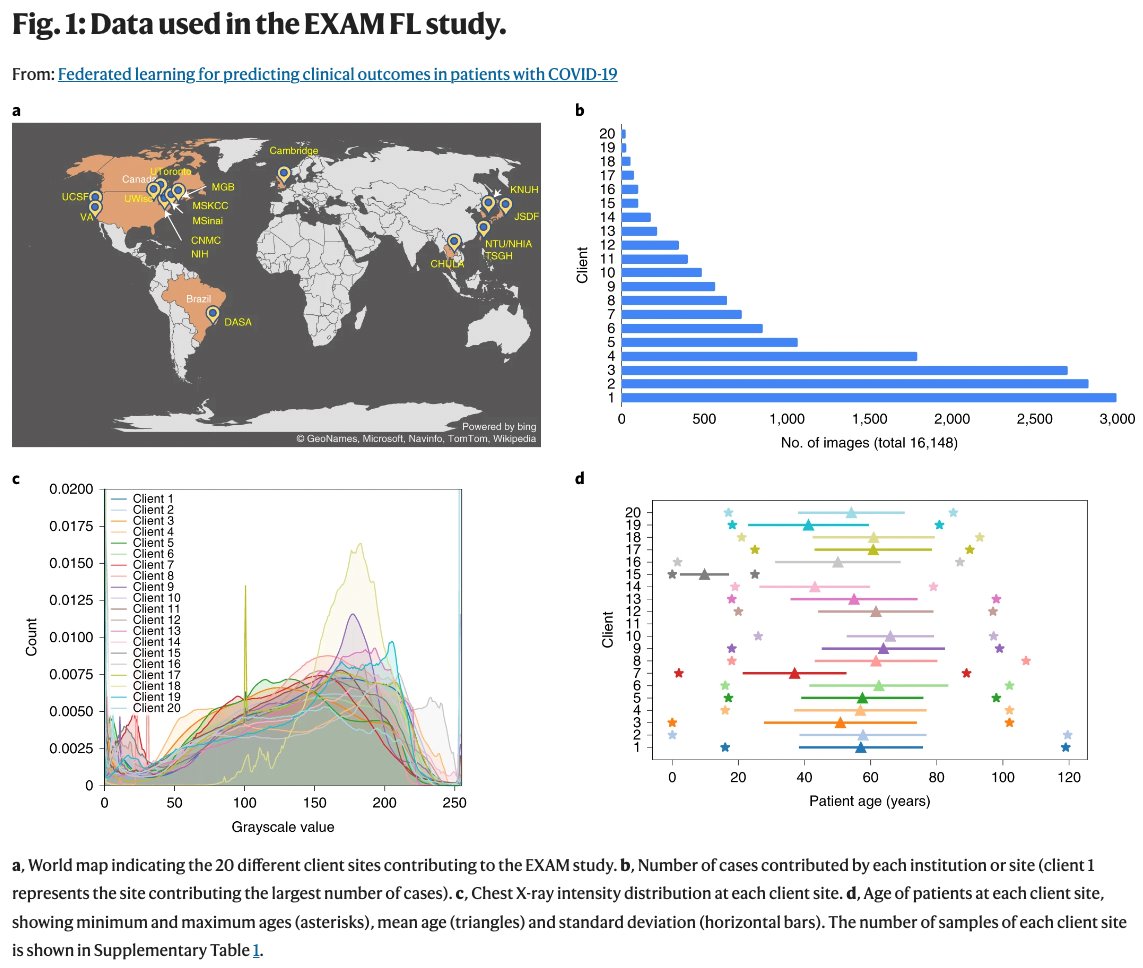

EXAM AI Model for Predicting Oxygen Requirements in COVID Patients

AI model to predict oxygen requirements

NVIDIA researchers, Massachusetts General Brigham Hospital

Training was completed in two weeks and produced a global model with an Area Under the Curve (AUC) of 0.94 for predicting the level of oxygen required by incoming patients.

Federated learning for predicting clinical outcomes in patients with COVID-19

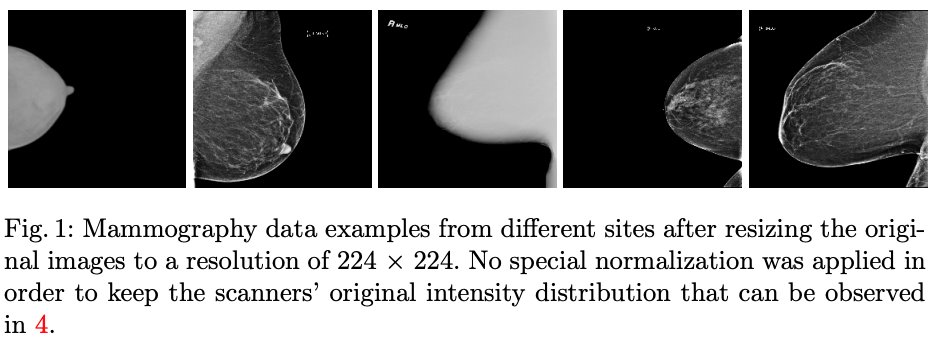

ADOPS (ACR DASA OSU Partners HealthCare Stanford)

Breast mammography AI model. Early detection: improved breast density classification

The American College of Radiology (ACR), Diagnosticos da America (DASA), Ohio State University (OSU), Partners HealthCare (PHS), and Stanford University

Each institution obtained a model with better overall predictive power on its own local dataset. In doing so, Federated Learning enabled improved breast density classification from mammograms, which could lead to better breast cancer risk assessment.

Federated Learning for Breast Density Classification: A Real-World Implementation

University of Minnesota and Fairview X-Ray COVID AI Model

Improve AI models for COVID-19 diagnosis based on chest X-rays

University of Minnesota and Fairview Mhealth, Indiana University (Indiana, USA), and Emory University (Georgia, USA)

Initial results showed an improvement in performance of the global model of 5% AUROC and 8% AUPRC on the UMN local dataset as compared to the UMN local model.

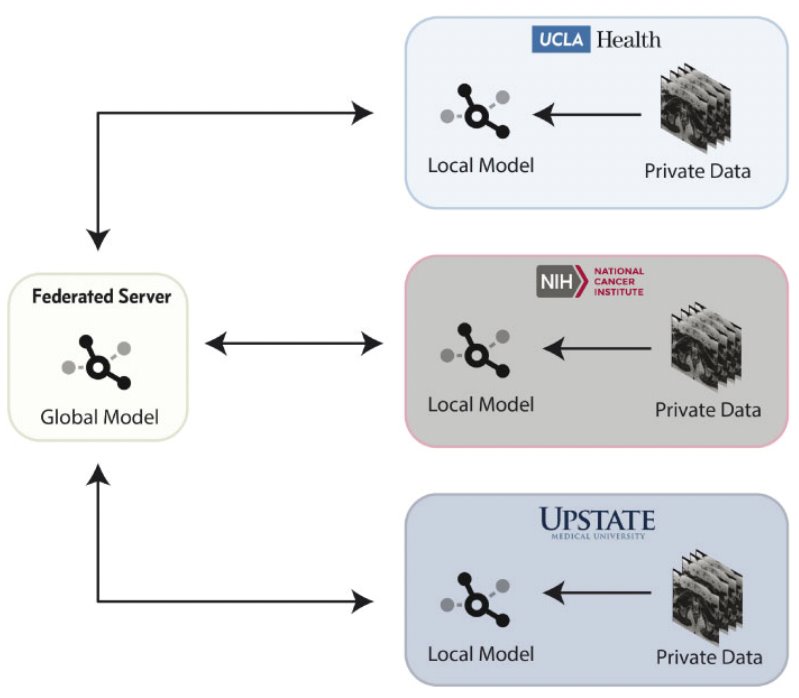

SUN Initiative Prostate Cancer AI Model

Federated segmentation model

SUNY, UCLA, NIH

The experimental Federated Learning model performed equivalently to the benchmark PD model, demonstrating the feasibility of training an AI model with a Federated Learning approach.

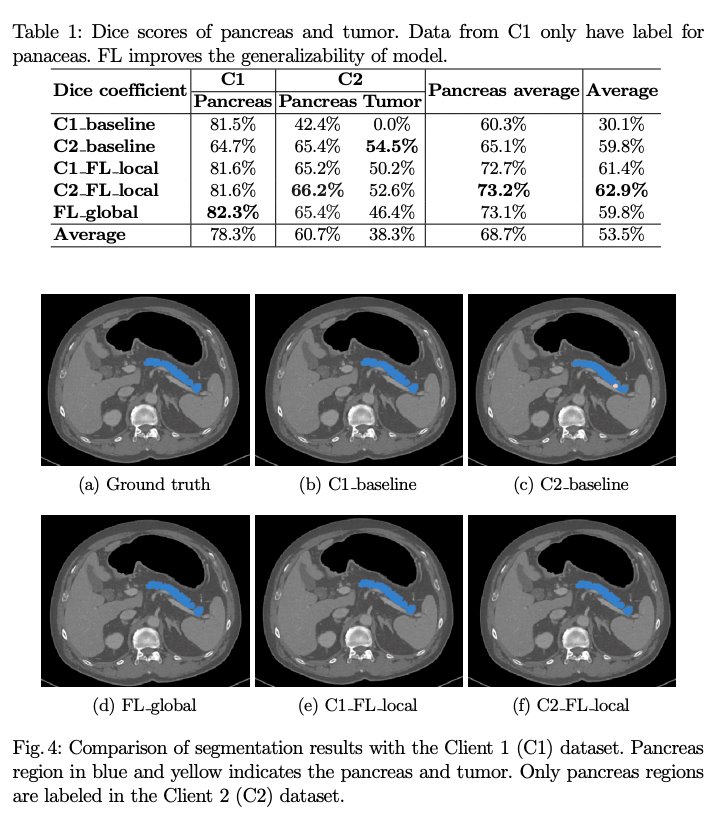

CT Pancreas Segmentation AI Model

Automated segmentation model of the pancreas and pancreatic tumors in abdominal CT

National Taiwan University, Taiwan, and Nagoya University, Japan

The global Federated Learning model achieved an average segmentation Dice score of 82.3% on patients with a healthy pancreas.
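For readers unfamiliar with the metric, the Dice score measures the overlap between a predicted mask and a reference mask as 2|A ∩ B| / (|A| + |B|). The sketch below is a minimal illustration on flattened binary masks with toy data. It is not the evaluation code used in the study, and returning 1.0 when both masks are empty is a convention assumed for this example.

```python
def dice_score(pred, target):
    """Dice coefficient for two flat binary masks of equal length."""
    if len(pred) != len(target):
        raise ValueError("masks must have the same number of voxels")
    # Voxels marked as foreground in both masks.
    intersection = sum(1 for p, t in zip(pred, target) if p and t)
    # Total foreground voxels across the two masks.
    total = sum(1 for p in pred if p) + sum(1 for t in target if t)
    if total == 0:
        # Assumed convention: two empty masks agree perfectly.
        return 1.0
    return 2.0 * intersection / total


# Toy masks: 2 overlapping voxels, 3 foreground voxels in each mask.
pred = [0, 1, 1, 1, 0, 0]
target = [0, 1, 1, 0, 1, 0]
print(round(dice_score(pred, target), 3))  # 2*2 / (3+3) = 0.667
```

A score of 1.0 means the two masks match exactly, and 0.0 means they share no foreground voxels.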