Multimodal Models from NVIDIA AI Catalog with a LangChain Agent

Prerequisites

To run this notebook, you need the following:

Performed the setup and generated an API key.

Installed Python dependencies from requirements.txt.

Installed additional packages for this notebook:

pip install gradio matplotlib scikit-image

This notebook covers the following custom plug-in components:

LLM using ai-mixtral-8x7b-instruct

NVIDIA AI Catalog DePlot as one of the tools

NVIDIA AI Catalog Fuyu as one of the tools

Gradio as a simple user interface for uploading images

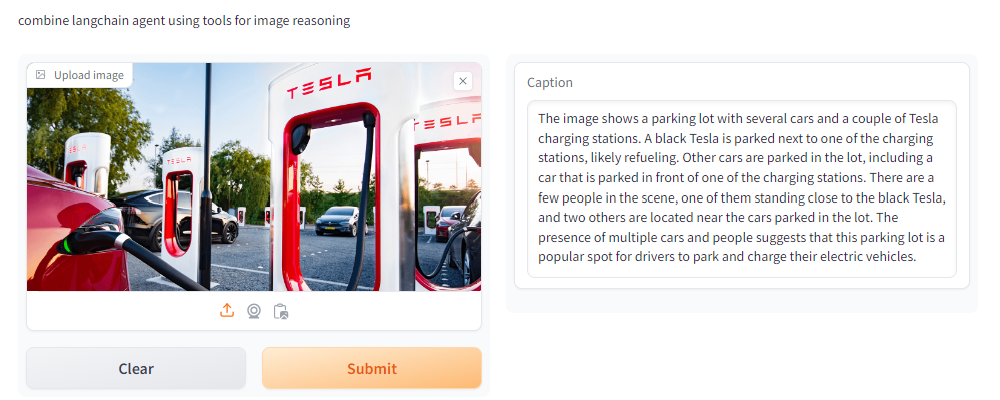

The end goal, as illustrated above, is a UI that lets users upload an image of their choice and has the agent choose the right tools for visual reasoning.

Note: Because the models are called through the NVIDIA AI Catalog API, no local GPU is required to run this notebook.

# uncomment the lines below to install additional Python packages
#!pip install unstructured
#!pip install matplotlib scikit-image
!pip install gradio==3.48.0

Step 1 - Export the NVIDIA_API_KEY

You can supply the NVIDIA_API_KEY directly in this notebook when you run the cell below.

import getpass
import os

# del os.environ['NVIDIA_API_KEY']  ## delete key and reset
if os.environ.get("NVIDIA_API_KEY", "").startswith("nvapi-"):
    print("Valid NVIDIA_API_KEY already in environment. Delete to reset")
else:
    nvapi_key = getpass.getpass("NVAPI Key (starts with nvapi-): ")
    assert nvapi_key.startswith("nvapi-"), f"{nvapi_key[:5]}... is not a valid key"
    os.environ["NVIDIA_API_KEY"] = nvapi_key

# Read the key back so `nvapi_key` is defined in both branches; later cells use it.
nvapi_key = os.environ["NVIDIA_API_KEY"]
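For non-interactive runs, such as a scheduled job where `getpass` cannot prompt, the same check can be wrapped in a small function. This is a minimal sketch: `load_nvapi_key` is a hypothetical helper name, and it only checks the `nvapi-` prefix used above. It does not confirm with the server that the key actually works.

```python
import os
from typing import Mapping, Optional


def load_nvapi_key(env: Optional[Mapping[str, str]] = None) -> str:
    """Return the NVIDIA API key from the environment or raise a clear error.

    Hypothetical helper: it mirrors the prefix check in the cell above and
    does not contact the NVIDIA API.
    """
    env = os.environ if env is None else env
    key = env.get("NVIDIA_API_KEY", "")
    if not key.startswith("nvapi-"):
        # Echo only the first characters so a wrong key is not leaked into logs.
        raise ValueError(f"{key[:5]!r}... is not a valid NVIDIA_API_KEY (expected prefix 'nvapi-')")
    return key
```

Usage: `nvapi_key = load_nvapi_key()` reads `os.environ`. You can also pass a plain dict, which makes the check easy to exercise without touching the real environment.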

Step 2 - Wrap the Fuyu API call in a function and verify it by supplying an image and checking the response

import base64
import io
import json

import requests
from PIL import Image

def fetch_outputs(output):
    """Join the `delta.content` pieces of a streamed (SSE) chat-completion response."""
    collect_streaming_outputs = []
    for o in output:
        try:
            # Each event line looks like `data: {...}`; parse from the first `{`.
            start = o.index('{')
            d = json.loads(o[start:])
            collect_streaming_outputs.append(d['choices'][0]['delta']['content'])
        except (ValueError, KeyError, IndexError, TypeError):
            # Skip lines without JSON (e.g. `data: [DONE]`) or without content.
            pass
    outputs = ''.join(collect_streaming_outputs)
    # Remove backslashes and single quotes from the generated text.
    return outputs.replace('\\', '').replace('\'', '')

def img2base64_string(img_path):
    """Downscale the image to at most 800x800 and return it as a base64 JPEG string."""
    image = Image.open(img_path)
    if image.width > 800 or image.height > 800:
        image.thumbnail((800, 800))  # keeps the aspect ratio
    buffered = io.BytesIO()
    image.convert("RGB").save(buffered, format="JPEG", quality=85)
    return base64.b64encode(buffered.getvalue()).decode()

def fuyu(prompt, img_path):
    """Send `prompt` plus the image to the Fuyu-8B endpoint and return the reply text."""
    invoke_url = "https://ai.api.nvidia.com/v1/vlm/adept/fuyu-8b"
    stream = True
    image_b64 = img2base64_string(img_path)
    assert len(image_b64) < 200_000, \
        "To upload larger images, use the assets API (see docs)"
    headers = {
        "Authorization": f"Bearer {nvapi_key}",
        "Accept": "text/event-stream" if stream else "application/json"
    }
    payload = {
        "messages": [
            {
                "role": "user",
                # img2base64_string produces JPEG data, so label the data URI accordingly.
                "content": f'{prompt} <img src="data:image/jpeg;base64,{image_b64}" />'
            }
        ],
        "max_tokens": 1024,
        "temperature": 0.20,
        "top_p": 0.70,
        "seed": 0,
        "stream": stream
    }
    response = requests.post(invoke_url, headers=headers, json=payload, stream=stream)
    response.raise_for_status()  # fail loudly on authentication or quota errors
    if stream:
        output = [line.decode("utf-8") for line in response.iter_lines() if line]
        return fetch_outputs(output)
    # Non-streaming reply: full message instead of deltas (OpenAI-style format assumed).
    return response.json()['choices'][0]['message']['content']
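`fetch_outputs` looks for the first `{` on each streamed line and silently skips anything that fails to parse. Below is a slightly stricter sketch of the same idea. It assumes each event arrives as a `data: {...}` line in the chat-completion chunk format that `fetch_outputs` already reads (`choices[0].delta.content`), followed by a closing `data: [DONE]` line. `parse_sse_lines` is a hypothetical name. Unlike `fetch_outputs`, it leaves backslashes and single quotes in the text.

```python
import json
from typing import Iterable, List


def parse_sse_lines(lines: Iterable[str]) -> str:
    """Concatenate the streamed `delta.content` pieces of an SSE chat response."""
    pieces: List[str] = []
    for line in lines:
        line = line.strip()
        # SSE payload lines start with "data:"; other fields (event:, id:) are ignored.
        if not line.startswith("data:"):
            continue
        data = line[len("data:"):].strip()
        if data == "[DONE]":  # end-of-stream sentinel
            break
        try:
            chunk = json.loads(data)
            content = chunk["choices"][0]["delta"].get("content")
        except (json.JSONDecodeError, KeyError, IndexError, TypeError, AttributeError):
            continue  # skip malformed chunks
        if content:  # role-only or empty deltas carry no text
            pieces.append(content)
    return "".join(pieces)
```

In `fuyu`, `parse_sse_lines(output)` could stand in for `fetch_outputs(output)` if you want to keep the model's quotes intact.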

Fetch a test image of a pair of white sneakers and verify that the function works.

!mkdir -p ./toy_data
!wget "https://docs.google.com/uc?export=download&id=12ZpBBFkYu-jzz1iz356U5kMikn4uN9ww" -O ./toy_data/jordan.png

img_path = "./toy_data/jordan.png"
prompt = "describe the image"
out = fuyu(prompt, img_path)
out
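The `assert` in `fuyu` requires the base64 string to be shorter than 200_000 characters, and the DePlot tool later uses 180_000. Base64 turns every 3 input bytes into 4 output characters, so `n` bytes encode to `4 * ceil(n / 3)` characters. From that you can work out how large the JPEG produced by `img2base64_string` may be before the assert fires. The helper names below are illustrative.

```python
import base64
import math


def b64_len(n_bytes: int) -> int:
    """Length of the padded base64 encoding of n_bytes bytes."""
    return 4 * math.ceil(n_bytes / 3)


def max_raw_bytes(char_limit: int) -> int:
    """Largest byte count whose base64 encoding is strictly shorter than char_limit."""
    # 4 * ceil(n / 3) < limit  <=>  ceil(n / 3) <= (limit - 1) // 4  <=>  n <= 3 * ((limit - 1) // 4)
    return 3 * ((char_limit - 1) // 4)


for limit in (200_000, 180_000):
    n = max_raw_bytes(limit)
    print(limit, n, len(base64.b64encode(b"\0" * n)))
# 200000 149997 199996
# 180000 134997 179996
```

The JPEG must therefore stay under roughly 150 KB for Fuyu and 135 KB for DePlot. The 800x800 thumbnail at quality 85 usually keeps typical photos and charts within that limit.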

Step 3 - Use the Mixtral 8x7B model as the main LLM

# test run to check that the model generates a response successfully
from langchain_nvidia_ai_endpoints import ChatNVIDIA

llm = ChatNVIDIA(model="ai-mixtral-8x7b-instruct", nvidia_api_key=nvapi_key, max_tokens=1024)
print(llm.invoke("Say hello in one short sentence.").content)

# Set up prerequisites for the image captioning app user interface
import base64
import io
import os

import IPython.display
import requests
import gradio as gr
from PIL import Image

Step 4 - Wrap DePlot and Fuyu as tools for the agent


from langchain.tools import BaseTool
from langchain.agents import initialize_agent
from langchain.chains.conversation.memory import ConversationBufferWindowMemory


class ImageCaptionTool(BaseTool):
    # Type annotations are required when overriding BaseTool fields under pydantic v2.
    name: str = "Image captioner from Fuyu"
    description: str = ("Use this tool when given the path to an image that you would like to be described. "
                        "It will return a simple caption describing the image.")

    def _run(self, img_path: str) -> str:
        # Same request as `fuyu` in Step 2: endpoint, sampling parameters and 200_000-character limit.
        return fuyu("what is in this image", img_path)

    async def _arun(self, img_path: str) -> str:
        raise NotImplementedError("This tool does not support async")

class TabularPlotTool(BaseTool):
    name: str = "Tabular Plot reasoning tool"
    description: str = ("Use this tool when given the path to an image that contains a bar chart or pie chart. "
                        "It will extract and return the underlying tabular data.")

    def _run(self, img_path: str) -> str:
        invoke_url = "https://ai.api.nvidia.com/v1/vlm/google/deplot"
        stream = True
        image_b64 = img2base64_string(img_path)
        assert len(image_b64) < 180_000, \
            "To upload larger images, use the assets API (see docs)"
        headers = {
            "Authorization": f"Bearer {nvapi_key}",
            "Accept": "text/event-stream" if stream else "application/json"
        }
        payload = {
            "messages": [
                {
                    "role": "user",
                    # img2base64_string produces JPEG data, so label the data URI accordingly.
                    "content": f'Generate underlying data table of the figure below: <img src="data:image/jpeg;base64,{image_b64}" />'
                }
            ],
            "max_tokens": 1024,
            "temperature": 0.20,
            "top_p": 0.20,
            "stream": stream
        }
        response = requests.post(invoke_url, headers=headers, json=payload, stream=stream)
        response.raise_for_status()  # fail loudly on authentication or quota errors
        if stream:
            output = [line.decode("utf-8") for line in response.iter_lines() if line]
            return fetch_outputs(output)
        # Non-streaming reply: full message instead of deltas (OpenAI-style format assumed).
        return response.json()['choices'][0]['message']['content']

    async def _arun(self, img_path: str) -> str:
        raise NotImplementedError("This tool does not support async")
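`TabularPlotTool` returns DePlot's linearized table as plain text, and the LLM then reasons over that text. If you want to work with the numbers directly, a sketch like the one below can split the text into rows. It assumes two things: rows are separated by newlines or by the literal `<0x0A>` token that the open-source DePlot checkpoint emits, and cells are separated by `|`. The NVIDIA endpoint may format its output differently, so check a real response first. `parse_deplot_table` is a hypothetical helper.

```python
import re
from typing import List


def parse_deplot_table(text: str) -> List[List[str]]:
    """Split a linearized DePlot table into a list of rows of cell strings."""
    # Assumed row separators: literal "<0x0A>" tokens and real newlines.
    rows = re.split(r"<0x0A>|\n", text)
    table: List[List[str]] = []
    for row in rows:
        row = row.strip()
        if not row:
            continue  # skip empty rows from leading or trailing separators
        # Assumed cell separator: "|"; surrounding spaces are trimmed.
        table.append([cell.strip() for cell in row.split("|")])
    return table
```

With this assumed format, the first row typically holds the chart title and the second the column headers. For example, `parse_deplot_table("TITLE | Sales <0x0A> Year | Sales <0x0A> 2019 | 10")` gives three rows.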

Step 5 - Initialize the agent with the tools defined above

# initialize the agent
tools = [ImageCaptionTool(), TabularPlotTool()]

conversational_memory = ConversationBufferWindowMemory(
    memory_key='chat_history',
    k=5,  # keep only the last 5 exchanges in the prompt
    return_messages=True
)

agent = initialize_agent(
    agent="chat-conversational-react-description",
    tools=tools,
    llm=llm,
    max_iterations=5,
    verbose=True,
    memory=conversational_memory,
    handle_parsing_errors=True,
    early_stopping_method='generate'
)
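With `agent="chat-conversational-react-description"`, the LLM is prompted to reply with a JSON blob that names an `action` (a tool name, or `Final Answer`) and an `action_input`. LangChain then calls the matching tool and feeds the result back to the LLM. The sketch below imitates one such step with plain functions, so the control flow is visible without calling a model. It is a simplified illustration, not LangChain's actual output parser. The stub tools stand in for `ImageCaptionTool` and `TabularPlotTool` and make no network calls.

```python
import json
import re
from typing import Callable, Dict


def dispatch(llm_reply: str, tools: Dict[str, Callable[[str], str]]) -> str:
    """Run one simplified ReAct step: parse the JSON action and call the named tool."""
    # The reply may wrap the JSON in a Markdown json code fence; take the outermost {...} span.
    match = re.search(r"\{.*\}", llm_reply, re.DOTALL)
    if match is None:
        raise ValueError("no JSON action found in reply")
    action = json.loads(match.group(0))
    name, tool_input = action["action"], action["action_input"]
    if name == "Final Answer":
        return tool_input  # the agent stops and returns this text to the user
    if name not in tools:
        raise KeyError(f"unknown tool: {name}")
    return tools[name](tool_input)  # the observation the LLM sees in the next step


# Stub tools keyed by the same names as the real tools (no API calls).
stub_tools: Dict[str, Callable[[str], str]] = {
    "Image captioner from Fuyu": lambda path: f"caption for {path}",
    "Tabular Plot reasoning tool": lambda path: f"table for {path}",
}
```

This is also why clear, distinct `description` strings matter: the description is the only information the LLM has when it picks a tool name.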

Step 6 - Verify that the agent can use the tools with the supplied image and query

img_path = "./toy_data/jordan.png"
response = agent.invoke({"input": f'this is the image path: {img_path}'})
print(response['output'])

def my_agent(img_path):
    """Gradio callback: run the agent on the uploaded image's file path."""
    response = agent.invoke({"input": f'this is the image path: {img_path}'})
    return response['output']
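`my_agent` places the uploaded file path in the prompt, and the LLM then copies it into the tool input. Models sometimes wrap that value in quotes or add surrounding whitespace. That would make `Image.open` in `img2base64_string` fail. The hypothetical `clean_tool_path` below could be called at the top of each tool's `_run` as a guard. It addresses an assumed failure mode, not one observed in this notebook's output.

```python
from pathlib import Path


def clean_tool_path(raw: str) -> str:
    """Strip whitespace and one layer of matching quotes or backticks from a tool input path."""
    path = raw.strip()
    if len(path) >= 2 and path[0] == path[-1] and path[0] in "'\"`":
        path = path[1:-1].strip()
    return path


def require_existing_image(raw: str) -> str:
    """Return the cleaned path, or raise FileNotFoundError before any API call is made."""
    path = clean_tool_path(raw)
    if not Path(path).is_file():
        raise FileNotFoundError(path)
    return path
```

Failing early with `FileNotFoundError` gives the agent a clear observation to react to, rather than a PIL error raised deep inside the request code.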

Step 7 - Wrap the agent in a simple Gradio UI to upload arbitrary images interactively

import gradio as gr

ImageCaptionApp = gr.Interface(fn=my_agent,
                               inputs=[gr.Image(label="Upload image", type="filepath")],
                               outputs=[gr.Textbox(label="Caption")],
                               title="Image Captioning with LangChain Agent",
                               description="Combine a LangChain agent with tools for image reasoning",
                               allow_flagging="never")

ImageCaptionApp.launch(share=True)
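If the agent or one of the API calls raises an exception, Gradio typically shows only a generic error in the output box. Gradio may also pass `None` when the user submits without uploading an image. The wrapper below is a minimal sketch that turns both cases into readable text in the Caption box. `friendly_errors` is a hypothetical name and is not part of Gradio.

```python
import functools
from typing import Callable, Optional


def friendly_errors(fn: Callable[[str], str]) -> Callable[[Optional[str]], str]:
    """Wrap a Gradio handler so missing input and failures are shown as text."""
    @functools.wraps(fn)
    def wrapper(img_path: Optional[str]) -> str:
        if not img_path:  # nothing uploaded (None or empty string)
            return "Please upload an image first."
        try:
            return fn(img_path)
        except Exception as exc:  # show the reason instead of a generic error
            return f"Agent failed: {type(exc).__name__}: {exc}"
    return wrapper
```

Usage: pass `fn=friendly_errors(my_agent)` to `gr.Interface` in place of `fn=my_agent`. The trade-off is that errors no longer appear as Gradio errors. That suits a demo, but it hides failures from any code that inspects the app's error state.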