Model Hook Injection: Perturbation

Adding model noise by using custom hooks.

This example will demonstrate how to run an ensemble inference workflow to generate a perturbed ensemble forecast. This perturbation is done by injecting code into the model front and rear hooks. These hooks are applied to the tensor data before/after the model forward call.
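As a rough illustration of the hook mechanism described above, the sketch below wraps a forward call with a front and a rear hook. `ToyPrognostic` and its `_forward` method are hypothetical stand-ins written for this sketch, not Earth2Studio classes; only the idea of identity hooks that can be replaced is taken from the text.

```python
import numpy as np


class ToyPrognostic:
    """Toy stand-in for a prognostic model; not an Earth2Studio class."""

    def __init__(self):
        # Identity hooks by default: return the inputs unchanged
        self.front_hook = lambda x, coords: (x, coords)
        self.rear_hook = lambda x, coords: (x, coords)

    def _forward(self, x):
        # Placeholder dynamics: damp the state slightly each step
        return 0.9 * x

    def __call__(self, x, coords):
        x, coords = self.front_hook(x, coords)  # applied before the forward call
        x = self._forward(x)
        x, coords = self.rear_hook(x, coords)  # applied after the forward call
        return x, coords


toy = ToyPrognostic()
x0 = np.ones((4, 3))  # (ensemble, grid point)
coords = {"ensemble": np.arange(4), "point": np.arange(3)}

# Run once with the default identity hooks
baseline, _ = toy(x0, coords)

# Inject a perturbation by replacing the front hook
rng = np.random.default_rng(0)
toy.front_hook = lambda x, coords: (x + 0.01 * rng.standard_normal(x.shape), coords)
perturbed, _ = toy(x0, coords)

print(np.abs(perturbed - baseline).max())
```

Because the hooks are plain attributes, swapping one out changes the model behavior without touching the forward call itself.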

This example also illustrates how you can subselect data for IO. In this example we will only output two variables: total column water vapor (tcwv) and 500 hPa geopotential (z500). To run this, make sure that the selected model predicts these variables, or change them appropriately.
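To make the idea of subselecting output coordinates concrete, here is a small NumPy sketch. The global 0.25 degree grid and the three-variable list are assumptions made for illustration; the requested region and variables match the `output_coords` used later in this example. It is not how Earth2Studio performs the selection internally.

```python
import numpy as np

# Hypothetical model output coordinates (assumed for this sketch)
variable = np.array(["t2m", "tcwv", "z500"])
lat = np.linspace(-90.0, 90.0, 721)
lon = np.arange(0.0, 360.0, 0.25)
field = np.zeros((variable.size, lat.size, lon.size), dtype=np.float32)

# Requested output coordinates: a regional box and two variables
out = {
    "variable": np.array(["tcwv", "z500"]),
    "lat": np.arange(25.0, 60.0, 0.25),
    "lon": np.arange(230.0, 300.0, 0.25),
}

# Every requested value must exist in the model output coordinates
assert np.isin(out["variable"], variable).all()
assert np.isin(out["lat"], lat).all()
assert np.isin(out["lon"], lon).all()

# Translate coordinate values into integer indices, then slice
var_idx = [int(np.where(variable == v)[0][0]) for v in out["variable"]]
lat_idx = np.where(np.isin(lat, out["lat"]))[0]
lon_idx = np.where(np.isin(lon, out["lon"]))[0]
subset = field[np.ix_(var_idx, lat_idx, lon_idx)]

print(subset.shape)
```

Only the subset would then be written to the IO object, which keeps the stored output much smaller than the full model state.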

In this example you will learn:

Instantiating a built-in prognostic model

Creating a data source and IO object

Changing the model front/rear hooks

Choosing a subselection of coordinates to save to an IO object

Post-processing results

# /// script

# dependencies = [

# "earth2studio[dlwp] @ git+https://github.com/NVIDIA/earth2studio.git",

# "cartopy",

# ]

# ///

Creating an Ensemble Workflow

To start, let's create an ensemble workflow to use. We encourage users to explore and experiment with their own custom workflows that borrow ideas from the built-in workflows inside earth2studio.run or the examples.

Creating our own generalizable ensemble workflow is easy when we rely on the component interfaces defined in Earth2Studio (that is, when we use dependency injection). Here we create a run method that accepts the following:

time: Input list of datetimes / strings to run inference for

nsteps: Number of forecast steps to predict

nensemble: Number of ensemble members to run

prognostic: Our initialized prognostic model

data: Initialized data source to fetch initial conditions from

io: io store that data is written to.

output_coords: CoordSystem of output coordinates that should be saved. Should be a proper subset of model output coordinates.
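The argument list above can be read as a dependency-injection pattern: the workflow only orchestrates components that the caller passes in. The following is a minimal toy sketch of that pattern using plain callables and a dict as the IO store. `run_ensemble` and all of its internals are hypothetical and written for this sketch; it is not the implementation in earth2studio.run, and it omits `output_coords` handling for brevity.

```python
from typing import Callable

import numpy as np


def run_ensemble(
    time: list[str],
    nsteps: int,
    nensemble: int,
    prognostic: Callable[[np.ndarray], np.ndarray],
    data: Callable[[str], np.ndarray],
    io: dict,
    perturbation: Callable[[np.ndarray], np.ndarray],
    batch_size: int = 4,
) -> dict:
    """Toy ensemble loop; every component is injected by the caller."""
    for t in time:
        x0 = data(t)  # initial condition, shape (grid,)
        members = []
        for start in range(0, nensemble, batch_size):
            size = min(batch_size, nensemble - start)
            # Copy the initial condition once per member, then perturb it
            x = perturbation(np.repeat(x0[None], size, axis=0))
            steps = [x]
            for _ in range(nsteps):
                x = prognostic(x)
                steps.append(x)
            members.append(np.stack(steps, axis=1))  # (member, lead_time, grid)
        io[t] = np.concatenate(members, axis=0)
    return io


rng = np.random.default_rng(0)
result = run_ensemble(
    time=["2024-01-30"],
    nsteps=3,
    nensemble=6,
    prognostic=lambda x: 0.9 * x,
    data=lambda t: np.ones(5),
    io={},
    perturbation=lambda x: x + 0.01 * rng.standard_normal(x.shape),
)
print(result["2024-01-30"].shape)
```

Swapping the model, data source, or perturbation then only requires passing a different object, which is the property the built-in workflows rely on.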

Set Up

With the ensemble workflow defined, we now need to create the individual components.

We need the following:

Prognostic Model: Use the built-in DLWP model earth2studio.models.px.DLWP.

Datasource: Pull data from the GFS data API earth2studio.data.GFS.

IO Backend: Save the outputs into a Zarr store earth2studio.io.ZarrBackend.

We will first run the ensemble workflow using an unmodified model, that is, a model that has the default (identity) front and rear hooks. Then we will define new hooks for the model and rerun the inference request.

import os

os.makedirs("outputs", exist_ok=True)

from dotenv import load_dotenv

load_dotenv() # TODO: make common example prep function

import numpy as np

from earth2studio.data import GFS

from earth2studio.io import ZarrBackend

from earth2studio.models.px import DLWP

from earth2studio.perturbation import Gaussian

from earth2studio.run import ensemble

# Load the default model package which downloads the check point from NGC

package = DLWP.load_default_package()

model = DLWP.load_model(package)

# Create the data source

data = GFS()

# Create the IO handler, backed by a Zarr store on disk

chunks = {"ensemble": 1, "time": 1, "lead_time": 1}

io_unperturbed = ZarrBackend(file_name="outputs/05_ensemble.zarr", chunks=chunks)

Execute the Workflow

First, we will run the ensemble workflow with only an earth2studio.perturbation.Gaussian() initial-condition perturbation and no model perturbation. This run serves as the control.

The workflow returns the provided IO object to the user, which can then be used for post-processing. Some IO objects have additional APIs that can be handy for post-processing or saving to file. Check the API docs for more information.

nsteps = 4 * 12

nensemble = 16

batch_size = 4

forecast_date = "2024-01-30"

output_coords = {

"lat": np.arange(25.0, 60.0, 0.25),

"lon": np.arange(230.0, 300.0, 0.25),

"variable": np.array(["tcwv", "z500"]),

}

# First run with no model perturbation

io_unperturbed = ensemble(

[forecast_date],

nsteps,

nensemble,

model,

data,

io_unperturbed,

Gaussian(noise_amplitude=0.01),

output_coords=output_coords,

batch_size=batch_size,

)

2026-01-22 19:32:14.134 | INFO | earth2studio.run:ensemble:328 - Running ensemble inference!

2026-01-22 19:32:14.134 | INFO | earth2studio.run:ensemble:336 - Inference device: cuda

2026-01-22 19:32:14.531 | DEBUG | earth2studio.data.gfs:fetch_array:382 - Fetching GFS grib file: noaa-gfs-bdp-pds/gfs.20240129/18/atmos/gfs.t18z.pgrb2.0p25.f000 298404811-863710

2026-01-22 19:32:14.534 | DEBUG | earth2studio.data.gfs:fetch_array:382 - Fetching GFS grib file: noaa-gfs-bdp-pds/gfs.20240129/18/atmos/gfs.t18z.pgrb2.0p25.f000 333455139-848796

2026-01-22 19:32:14.549 | DEBUG | earth2studio.data.gfs:fetch_array:382 - Fetching GFS grib file: noaa-gfs-bdp-pds/gfs.20240129/18/atmos/gfs.t18z.pgrb2.0p25.f000 424587978-1175298

2026-01-22 19:32:14.551 | DEBUG | earth2studio.data.gfs:fetch_array:382 - Fetching GFS grib file: noaa-gfs-bdp-pds/gfs.20240129/18/atmos/gfs.t18z.pgrb2.0p25.f000 402393843-1000454

2026-01-22 19:32:14.553 | DEBUG | earth2studio.data.gfs:fetch_array:382 - Fetching GFS grib file: noaa-gfs-bdp-pds/gfs.20240129/18/atmos/gfs.t18z.pgrb2.0p25.f000 255947617-818002

2026-01-22 19:32:14.555 | DEBUG | earth2studio.data.gfs:fetch_array:382 - Fetching GFS grib file: noaa-gfs-bdp-pds/gfs.20240129/18/atmos/gfs.t18z.pgrb2.0p25.f000 213960931-731790

2026-01-22 19:32:14.557 | DEBUG | earth2studio.data.gfs:fetch_array:382 - Fetching GFS grib file: noaa-gfs-bdp-pds/gfs.20240129/18/atmos/gfs.t18z.pgrb2.0p25.f000 412982869-882301

Fetching GFS data: 100%|██████████| 7/7 [00:01<00:00, 6.63it/s]

2026-01-22 19:32:16.025 | DEBUG | earth2studio.data.gfs:fetch_array:382 - Fetching GFS grib file: noaa-gfs-bdp-pds/gfs.20240130/00/atmos/gfs.t00z.pgrb2.0p25.f000 407596993-877008

2026-01-22 19:32:16.027 | DEBUG | earth2studio.data.gfs:fetch_array:382 - Fetching GFS grib file: noaa-gfs-bdp-pds/gfs.20240130/00/atmos/gfs.t00z.pgrb2.0p25.f000 297270945-860182

2026-01-22 19:32:16.029 | DEBUG | earth2studio.data.gfs:fetch_array:382 - Fetching GFS grib file: noaa-gfs-bdp-pds/gfs.20240130/00/atmos/gfs.t00z.pgrb2.0p25.f000 419044588-1178697

2026-01-22 19:32:16.031 | DEBUG | earth2studio.data.gfs:fetch_array:382 - Fetching GFS grib file: noaa-gfs-bdp-pds/gfs.20240130/00/atmos/gfs.t00z.pgrb2.0p25.f000 256366833-813140

2026-01-22 19:32:16.033 | DEBUG | earth2studio.data.gfs:fetch_array:382 - Fetching GFS grib file: noaa-gfs-bdp-pds/gfs.20240130/00/atmos/gfs.t00z.pgrb2.0p25.f000 397114008-995975

2026-01-22 19:32:16.035 | DEBUG | earth2studio.data.gfs:fetch_array:382 - Fetching GFS grib file: noaa-gfs-bdp-pds/gfs.20240130/00/atmos/gfs.t00z.pgrb2.0p25.f000 330480536-845677

2026-01-22 19:32:16.037 | DEBUG | earth2studio.data.gfs:fetch_array:382 - Fetching GFS grib file: noaa-gfs-bdp-pds/gfs.20240130/00/atmos/gfs.t00z.pgrb2.0p25.f000 214406883-731397

Fetching GFS data: 100%|██████████| 7/7 [00:00<00:00, 9.07it/s]

2026-01-22 19:32:16.824 | SUCCESS | earth2studio.run:ensemble:358 - Fetched data from GFS

2026-01-22 19:32:16.863 | INFO | earth2studio.run:ensemble:386 - Starting 16 Member Ensemble Inference with 4 number of batches.

Total Ensemble Batches: 100%|██████████| 4/4 [00:12<00:00, 3.03s/it]

2026-01-22 19:32:28.987 | SUCCESS | earth2studio.run:ensemble:438 - Inference complete

Now let's introduce a slight model perturbation using the prognostic model hooks defined in earth2studio.models.px.utils.PrognosticMixin. Note that center.unsqueeze(-1) is DLWP-specific, since DLWP operates on a cubed sphere with grid dimensions (nface, lat, lon) instead of just (lat, lon). To switch to a different model, consider removing the unsqueeze() calls.

model.front_hook = lambda x, coords: (

x

- 0.1

* x.var(dim=0)

* (x - model.center.unsqueeze(-1))

/ (model.scale.unsqueeze(-1)) ** 2

+ 0.1 * (x - x.mean(dim=0)),

coords,

)

# Also could use model.rear_hook = ...

io_perturbed = ZarrBackend(

file_name="outputs/05_ensemble_model_perturbation.zarr",

chunks=chunks,

backend_kwargs={"overwrite": True},

)

io_perturbed = ensemble(

[forecast_date],

nsteps,

nensemble,

model,

data,

io_perturbed,

Gaussian(noise_amplitude=0.01),

output_coords=output_coords,

batch_size=batch_size,

)

2026-01-22 19:32:28.992 | INFO | earth2studio.run:ensemble:328 - Running ensemble inference!

2026-01-22 19:32:28.993 | INFO | earth2studio.run:ensemble:336 - Inference device: cuda

2026-01-22 19:32:29.090 | DEBUG | earth2studio.data.gfs:fetch_array:382 - Fetching GFS grib file: noaa-gfs-bdp-pds/gfs.20240129/18/atmos/gfs.t18z.pgrb2.0p25.f000 424587978-1175298

2026-01-22 19:32:29.101 | DEBUG | earth2studio.data.gfs:fetch_array:382 - Fetching GFS grib file: noaa-gfs-bdp-pds/gfs.20240129/18/atmos/gfs.t18z.pgrb2.0p25.f000 213960931-731790

2026-01-22 19:32:29.112 | DEBUG | earth2studio.data.gfs:fetch_array:382 - Fetching GFS grib file: noaa-gfs-bdp-pds/gfs.20240129/18/atmos/gfs.t18z.pgrb2.0p25.f000 333455139-848796

2026-01-22 19:32:29.123 | DEBUG | earth2studio.data.gfs:fetch_array:382 - Fetching GFS grib file: noaa-gfs-bdp-pds/gfs.20240129/18/atmos/gfs.t18z.pgrb2.0p25.f000 255947617-818002

2026-01-22 19:32:29.134 | DEBUG | earth2studio.data.gfs:fetch_array:382 - Fetching GFS grib file: noaa-gfs-bdp-pds/gfs.20240129/18/atmos/gfs.t18z.pgrb2.0p25.f000 402393843-1000454

2026-01-22 19:32:29.145 | DEBUG | earth2studio.data.gfs:fetch_array:382 - Fetching GFS grib file: noaa-gfs-bdp-pds/gfs.20240129/18/atmos/gfs.t18z.pgrb2.0p25.f000 412982869-882301

2026-01-22 19:32:29.156 | DEBUG | earth2studio.data.gfs:fetch_array:382 - Fetching GFS grib file: noaa-gfs-bdp-pds/gfs.20240129/18/atmos/gfs.t18z.pgrb2.0p25.f000 298404811-863710

Fetching GFS data: 100%|██████████| 7/7 [00:00<00:00, 91.24it/s]

2026-01-22 19:32:29.247 | DEBUG | earth2studio.data.gfs:fetch_array:382 - Fetching GFS grib file: noaa-gfs-bdp-pds/gfs.20240130/00/atmos/gfs.t00z.pgrb2.0p25.f000 214406883-731397

2026-01-22 19:32:29.259 | DEBUG | earth2studio.data.gfs:fetch_array:382 - Fetching GFS grib file: noaa-gfs-bdp-pds/gfs.20240130/00/atmos/gfs.t00z.pgrb2.0p25.f000 330480536-845677

2026-01-22 19:32:29.271 | DEBUG | earth2studio.data.gfs:fetch_array:382 - Fetching GFS grib file: noaa-gfs-bdp-pds/gfs.20240130/00/atmos/gfs.t00z.pgrb2.0p25.f000 407596993-877008

2026-01-22 19:32:29.282 | DEBUG | earth2studio.data.gfs:fetch_array:382 - Fetching GFS grib file: noaa-gfs-bdp-pds/gfs.20240130/00/atmos/gfs.t00z.pgrb2.0p25.f000 397114008-995975

2026-01-22 19:32:29.294 | DEBUG | earth2studio.data.gfs:fetch_array:382 - Fetching GFS grib file: noaa-gfs-bdp-pds/gfs.20240130/00/atmos/gfs.t00z.pgrb2.0p25.f000 297270945-860182

2026-01-22 19:32:29.306 | DEBUG | earth2studio.data.gfs:fetch_array:382 - Fetching GFS grib file: noaa-gfs-bdp-pds/gfs.20240130/00/atmos/gfs.t00z.pgrb2.0p25.f000 419044588-1178697

2026-01-22 19:32:29.317 | DEBUG | earth2studio.data.gfs:fetch_array:382 - Fetching GFS grib file: noaa-gfs-bdp-pds/gfs.20240130/00/atmos/gfs.t00z.pgrb2.0p25.f000 256366833-813140

Fetching GFS data: 100%|██████████| 7/7 [00:00<00:00, 86.09it/s]

2026-01-22 19:32:29.353 | SUCCESS | earth2studio.run:ensemble:358 - Fetched data from GFS

2026-01-22 19:32:29.395 | INFO | earth2studio.run:ensemble:386 - Starting 16 Member Ensemble Inference with 4 number of batches.

Total Ensemble Batches: 100%|██████████| 4/4 [00:12<00:00, 3.02s/it]

2026-01-22 19:32:41.463 | SUCCESS | earth2studio.run:ensemble:438 - Inference complete
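The front hook defined earlier in this section can be read as two terms added to the input: one that pulls the state toward the model's normalization center in proportion to the ensemble variance, and one that inflates each member's deviation from the batch mean. The NumPy sketch below reproduces that formula on toy data to show the role of the trailing-axis broadcast. The shapes, the `front_hook_sketch` name, and the zero/one values for `center` and `scale` are assumptions for illustration; the actual DLWP tensors are torch tensors with different sizes.

```python
import numpy as np


def front_hook_sketch(x, center, scale, alpha=0.1):
    """NumPy version of the hook formula; axis 0 is the ensemble/batch axis."""
    center = center[..., None]  # NumPy equivalent of unsqueeze(-1)
    scale = scale[..., None]
    var = x.var(axis=0, ddof=1)  # torch.Tensor.var uses the unbiased estimate by default
    mean = x.mean(axis=0)
    return x - alpha * var * (x - center) / scale**2 + alpha * (x - mean)


rng = np.random.default_rng(0)
# Toy layout: (ensemble, variable, nface, lat, lon)
x = rng.standard_normal((4, 2, 6, 3, 3))
# Per-variable statistics stored without the extra cubed-sphere axis
center = np.zeros((1, 2, 1, 1))
scale = np.ones((1, 2, 1, 1))

perturbed = front_hook_sketch(x, center, scale)
print(perturbed.shape)

# With identical members the variance and the deviation from the mean
# both vanish, so the hook leaves the input unchanged
identical = np.repeat(x[:1], 4, axis=0)
unchanged = front_hook_sketch(identical, center, scale)
print(np.allclose(unchanged, identical))
```

The extra trailing axis is what lets statistics stored for a (lat, lon) layout broadcast against a (nface, lat, lon) layout, which is why it may need to be dropped for models on a regular grid.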

Post Processing

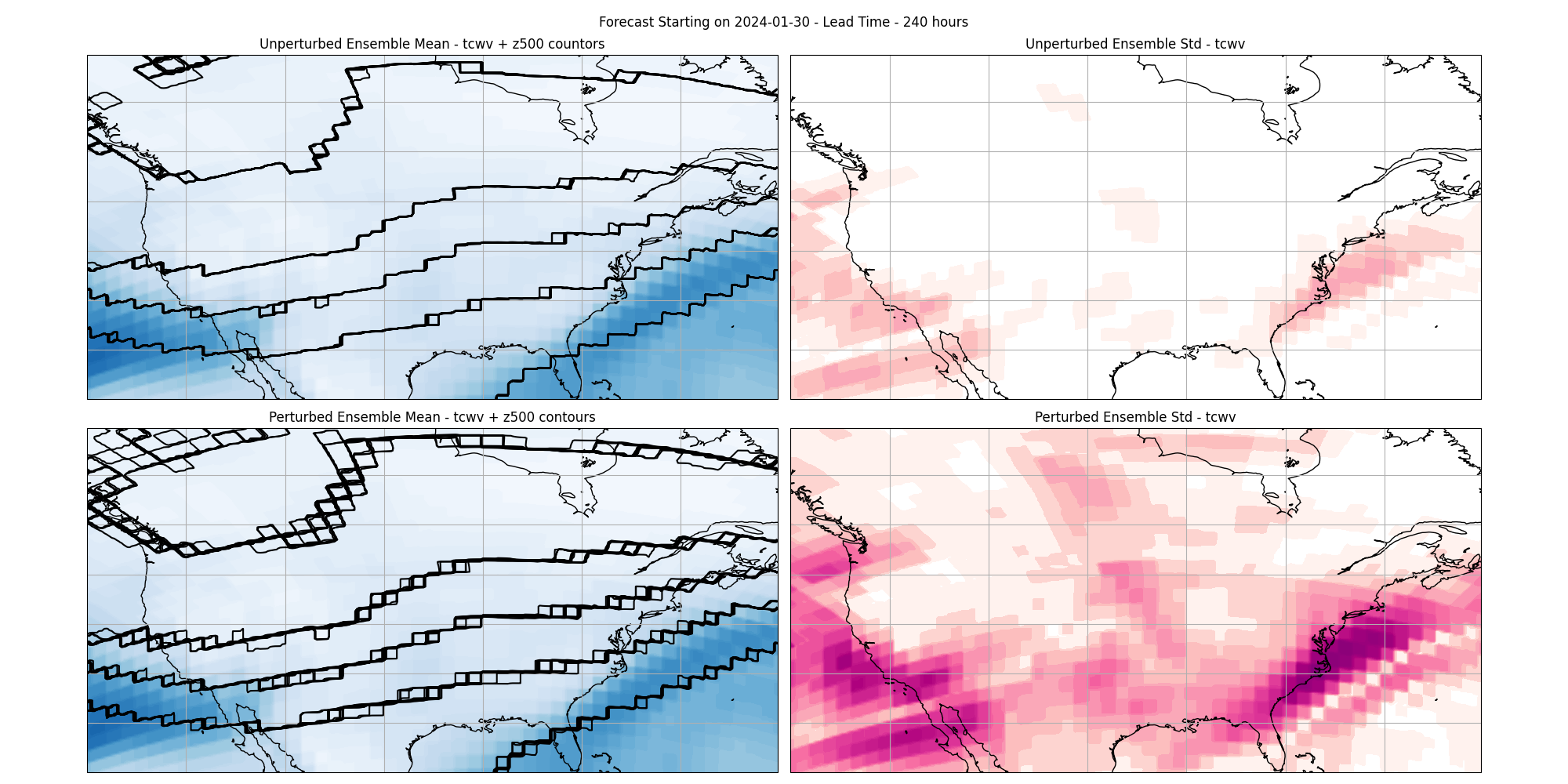

The last step is to post process our results. Here we plot and compare the ensemble mean and standard deviation from using an unperturbed/perturbed model.

Notice that the Zarr IO object has additional APIs to interact with the stored data.
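The ensemble statistics plotted in this section are reductions over the leading ensemble axis. A minimal sketch with a random toy array is shown here; it assumes the stored array is laid out as (ensemble, time, lead_time, lat, lon), which matches how the plotting code in this example indexes it, and uses made-up values rather than model output.

```python
import numpy as np

rng = np.random.default_rng(0)
# Toy stand-in for the stored tcwv array: (ensemble, time, lead_time, lat, lon)
tcwv = rng.gamma(shape=2.0, scale=10.0, size=(16, 1, 5, 8, 12))

frame = 3  # lead-time index to plot
member_fields = tcwv[:, 0, frame]  # (ensemble, lat, lon) for the first start time

# Reduce over the ensemble axis to get one 2D field each
ens_mean = member_fields.mean(axis=0)
ens_std = member_fields.std(axis=0)

print(ens_mean.shape, ens_std.shape)
```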

import cartopy.crs as ccrs

import matplotlib.pyplot as plt

from matplotlib.colors import LogNorm

levels_unperturbed = np.linspace(0, io_unperturbed["tcwv"][:].max())

levels_perturbed = np.linspace(0, io_perturbed["tcwv"][:].max())

std_levels_perturbed = np.linspace(0, io_perturbed["tcwv"][:].std(axis=0).max())

plt.close("all")

fig = plt.figure(figsize=(20, 10), tight_layout=True)

ax0 = fig.add_subplot(2, 2, 1, projection=ccrs.PlateCarree())

ax1 = fig.add_subplot(2, 2, 2, projection=ccrs.PlateCarree())

ax2 = fig.add_subplot(2, 2, 3, projection=ccrs.PlateCarree())

ax3 = fig.add_subplot(2, 2, 4, projection=ccrs.PlateCarree())

def update(frame):
    """This function updates the frame with a new lead time for animation."""
    import warnings

    warnings.filterwarnings("ignore")
    ax0.clear()
    ax1.clear()
    ax2.clear()
    ax3.clear()

    ## Update unperturbed image
    im0 = ax0.contourf(
        io_unperturbed["lon"][:],
        io_unperturbed["lat"][:],
        io_unperturbed["tcwv"][:, 0, frame].mean(axis=0),
        transform=ccrs.PlateCarree(),
        cmap="Blues",
        levels=levels_unperturbed,
    )
    ax0.coastlines()
    ax0.gridlines()

    im1 = ax1.contourf(
        io_unperturbed["lon"][:],
        io_unperturbed["lat"][:],
        io_unperturbed["tcwv"][:, 0, frame].std(axis=0),
        transform=ccrs.PlateCarree(),
        cmap="RdPu",
        levels=std_levels_perturbed,
        norm=LogNorm(vmin=1e-1, vmax=std_levels_perturbed[-1]),
    )
    ax1.coastlines()
    ax1.gridlines()

    ## Update perturbed image
    im2 = ax2.contourf(
        io_perturbed["lon"][:],
        io_perturbed["lat"][:],
        io_perturbed["tcwv"][:, 0, frame].mean(axis=0),
        transform=ccrs.PlateCarree(),
        cmap="Blues",
        levels=levels_perturbed,
    )
    ax2.coastlines()
    ax2.gridlines()

    im3 = ax3.contourf(
        io_perturbed["lon"][:],
        io_perturbed["lat"][:],
        io_perturbed["tcwv"][:, 0, frame].std(axis=0),
        transform=ccrs.PlateCarree(),
        cmap="RdPu",
        levels=std_levels_perturbed,
        norm=LogNorm(vmin=1e-1, vmax=std_levels_perturbed[-1]),
    )
    ax3.coastlines()
    ax3.gridlines()

    # Overlay z500 contours for every ensemble member
    for i in range(nensemble):
        ax0.contour(
            io_unperturbed["lon"][:],
            io_unperturbed["lat"][:],
            io_unperturbed["z500"][i, 0, frame] / 100.0,
            transform=ccrs.PlateCarree(),
            levels=np.arange(485, 580, 15),
            colors="black",
            linestyles="dashed",
        )
        ax2.contour(
            io_perturbed["lon"][:],
            io_perturbed["lat"][:],
            io_perturbed["z500"][i, 0, frame] / 100.0,
            transform=ccrs.PlateCarree(),
            levels=np.arange(485, 580, 15),
            colors="black",
            linestyles="dashed",
        )

    plt.suptitle(
        f'Forecast Starting on {forecast_date} - Lead Time - {io_perturbed["lead_time"][frame]}'
    )
    ax0.set_title("Unperturbed Ensemble Mean - tcwv + z500 contours")
    ax1.set_title("Unperturbed Ensemble Std - tcwv")
    ax2.set_title("Perturbed Ensemble Mean - tcwv + z500 contours")
    ax3.set_title("Perturbed Ensemble Std - tcwv")

    # Colorbars are only added once, on frame 0 (the first frame of the animation path)
    if frame == 0:
        plt.colorbar(
            im0, ax=ax0, shrink=0.75, pad=0.04, label="kg m^-2", format="%2.1f"
        )
        plt.colorbar(
            im1, ax=ax1, shrink=0.75, pad=0.04, label="kg m^-2", format="%1.2e"
        )
        plt.colorbar(
            im2, ax=ax2, shrink=0.75, pad=0.04, label="kg m^-2", format="%2.1f"
        )
        plt.colorbar(
            im3, ax=ax3, shrink=0.75, pad=0.04, label="kg m^-2", format="%1.2e"
        )

# Uncomment this for animation
# import matplotlib.animation as animation
# update(0)
# ani = animation.FuncAnimation(
#     fig=fig, func=update, frames=range(1, nsteps), cache_frame_data=False
# )
# ani.save(f"outputs/05_model_perturbation_{forecast_date}.gif", dpi=300)

for lt in [10, 20, 30, 40]:
    update(lt)
    plt.savefig(
        f"outputs/05_model_perturbation_{forecast_date}_leadtime_{lt}.png",
        dpi=300,
        bbox_inches="tight",
    )

Total running time of the script: (0 minutes 38.778 seconds)