Using Quantum Hardware Providers

CUDA-Q supports a set of hardware providers (Amazon Braket, Anyon Technologies, Infleqtion, IonQ, IQM, OQC, ORCA Computing, Pasqal, Quantinuum, and QuEra Computing). For more information about executing quantum kernels on different hardware backends, see the hardware backends documentation.

Amazon Braket

The following code illustrates how to run kernels on Amazon Braket’s backends.

import cudaq

# NOTE: Amazon Braket credentials must be set before running this program.

# Amazon Braket costs apply.

cudaq.set_target("braket")

# The default device is SV1, a state vector simulator. Users may choose any of

# the available devices by supplying its `ARN` with the `machine` parameter.

# For example,

# ```

# cudaq.set_target("braket", machine="arn:aws:braket:eu-north-1::device/qpu/iqm/Garnet")

# ```

# Create the kernel we'd like to execute

@cudaq.kernel
def kernel():
    qvector = cudaq.qvector(2)
    h(qvector[0])
    x.ctrl(qvector[0], qvector[1])

# Execute and print out the results.

# Option A:

# By using the asynchronous `cudaq.sample_async`, the remaining

# classical code will be executed while the job is being handled

# by Amazon Braket.

async_results = cudaq.sample_async(kernel)

# ... more classical code to run ...

async_counts = async_results.get()

print(async_counts)

# Option B:

# By using the synchronous `cudaq.sample`, the execution of

# any remaining classical code in the file will occur only

# after the job has been returned from Amazon Braket.

counts = cudaq.sample(kernel)

print(counts)
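The `machine` value in the example above is an AWS ARN. As a rough illustration of what that string encodes, the sketch below splits it into its parts using only the Python standard library. The helper name `parse_braket_device_arn` is hypothetical and not part of CUDA-Q or the Braket SDK, and the `device/<kind>/<provider>/<name>` layout is inferred from the example ARN rather than taken from a specification.

```python
def parse_braket_device_arn(arn: str) -> dict:
    """Split a Braket device ARN into named parts (illustrative helper)."""
    # General ARN layout: arn:partition:service:region:account-id:resource
    prefix, partition, service, region, account, resource = arn.split(":", 5)
    if prefix != "arn" or service != "braket":
        raise ValueError(f"not a Braket ARN: {arn!r}")
    # Assumed resource layout, inferred from the example ARN:
    # device/<kind>/<provider>/<name>
    tag, kind, provider, name = resource.split("/", 3)
    if tag != "device":
        raise ValueError(f"not a device ARN: {arn!r}")
    return {"region": region, "kind": kind, "provider": provider, "name": name}


info = parse_braket_device_arn(
    "arn:aws:braket:eu-north-1::device/qpu/iqm/Garnet")
print(info)
```

A check like this can catch a mistyped ARN locally before a job is submitted, although the service itself remains the authority on which devices exist.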

// Compile and run with:

// ```

// nvq++ --target braket braket.cpp -o out.x && ./out.x

// ```

// This will submit the job to the Amazon Braket state vector simulator

// (default). Alternatively, users can choose any of the available devices by

// specifying its `ARN` with the `--braket-machine` flag, e.g.,

// ```

// nvq++ --target braket --braket-machine \

// "arn:aws:braket:eu-north-1::device/qpu/iqm/Garnet" braket.cpp -o out.x

// ./out.x

// ```

// Assumes a valid set of credentials has been set prior to execution.

#include <cudaq.h>

#include <fstream>

// Define a simple quantum kernel to execute on Amazon Braket.

struct ghz {

// Maximally entangled state between 5 qubits.

auto operator()() __qpu__ {

cudaq::qvector q(5);

h(q[0]);

for (int i = 0; i < 4; i++) {

x<cudaq::ctrl>(q[i], q[i + 1]);

}

mz(q);

}

};

int main() {

// Submit asynchronously (e.g., continue executing

// code in the file until the job has been returned).

auto future = cudaq::sample_async(ghz{});

// ... classical code to execute in the meantime ...

// Get the results of the future.

auto async_counts = future.get();

async_counts.dump();

// OR: Submit synchronously (e.g., wait for the job

// result to be returned before proceeding).

auto counts = cudaq::sample(ghz{});

counts.dump();

}

Anyon Technologies

The following code illustrates how to run kernels on Anyon’s backends.

import cudaq

# You only have to set the target once! No need to redefine it

# for every execution call on your kernel.

# To use different targets in the same file, you must update

# it via another call to `cudaq.set_target()`

# To use the Anyon target you will need to set up credentials in `~/.anyon_config`

# The configuration file should contain your Anyon Technologies username and password:

# credentials: {"username":"<username>","password":"<password>"}

# Set the target to the default QPU

cudaq.set_target("anyon")

# You can specify a specific machine via the machine parameter:

# ```

# cudaq.set_target("anyon", machine="telegraph-8q")

# ```

# or for the larger system:

# ```

# cudaq.set_target("anyon", machine="berkeley-25q")

# ```

# Create the kernel we'd like to execute on Anyon.

@cudaq.kernel
def ghz():
    """Maximally entangled state between 5 qubits."""
    q = cudaq.qvector(5)
    h(q[0])
    for i in range(4):
        x.ctrl(q[i], q[i + 1])
    return mz(q)

# Execute on Anyon and print out the results.

# Option A (recommended):

# By using the asynchronous `cudaq.sample_async`, the remaining

# classical code will be executed while the job is being handled

# remotely on Anyon's superconducting QPU. This is ideal for

# longer running jobs.

future = cudaq.sample_async(ghz)

# ... classical optimization code can run while job executes ...

# Can write the future to file:

with open("future.txt", "w") as outfile:
    print(future, file=outfile)

# Then come back and read it in later.
with open("future.txt", "r") as infile:
    restored_future = cudaq.AsyncSampleResult(infile.read())

# Get the results of the restored future.

async_counts = restored_future.get()

print("Asynchronous results:")

async_counts.dump()

# Option B:

# By using the synchronous `cudaq.sample`, the kernel

# will be executed on Anyon and the calling thread will be blocked

# until the results are returned.

counts = cudaq.sample(ghz)

print("\nSynchronous results:")

counts.dump()

// Compile and run with:

// ```

// nvq++ --target anyon anyon.cpp -o out.x && ./out.x

// ```

// This will submit the job to Anyon's default superconducting QPU.

// You can specify a specific machine via the `--anyon-machine` flag:

// ```

// nvq++ --target anyon --anyon-machine telegraph-8q anyon.cpp -o out.x &&

// ./out.x

// ```

// or for the larger system:

// ```

// nvq++ --target anyon --anyon-machine berkeley-25q anyon.cpp -o out.x &&

// ./out.x

// ```

//

// To use this target you will need to set up credentials in `~/.anyon_config`

// The configuration file should contain your Anyon Technologies username and

// password:

// ```

// credential:<username>:<password>

// ```

#include <cudaq.h>

#include <fstream>

// Define a quantum kernel to execute on Anyon backend.

struct ghz {

// Maximally entangled state between 5 qubits.

auto operator()() __qpu__ {

cudaq::qvector q(5);

h(q[0]);

for (int i = 0; i < 4; i++) {

x<cudaq::ctrl>(q[i], q[i + 1]);

}

mz(q);

}

};

int main() {

// Submit asynchronously

auto future = cudaq::sample_async(ghz{});

// ... classical optimization code can run while job executes ...

// Can write the future to file:

{

std::ofstream out("saveMe.json");

out << future;

}

// Then come back and read it in later.

cudaq::async_result<cudaq::sample_result> readIn;

std::ifstream in("saveMe.json");

in >> readIn;

// Get the results of the read in future.

auto async_counts = readIn.get();

async_counts.dump();

// OR: Submit synchronously

auto counts = cudaq::sample(ghz{});

counts.dump();

return 0;

}
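The GHZ kernels above apply a Hadamard gate followed by a chain of controlled-X gates. To see what an ideal, noise-free device would be expected to return, the following sketch simulates that circuit with a tiny state-vector routine in plain Python. It does not use CUDA-Q, and the function names and the bit-ordering convention are assumptions made for this illustration only.

```python
import math


def apply_h(state, target, n):
    """Apply a Hadamard gate to qubit `target` of an n-qubit state."""
    s = 1 / math.sqrt(2)
    bit = 1 << (n - 1 - target)  # sketch convention: qubit 0 is the leftmost bit
    new = [0.0] * len(state)
    for i, amp in enumerate(state):
        i0, i1 = i & ~bit, i | bit
        if i & bit:  # H|1> = (|0> - |1>) / sqrt(2)
            new[i0] += s * amp
            new[i1] -= s * amp
        else:  # H|0> = (|0> + |1>) / sqrt(2)
            new[i0] += s * amp
            new[i1] += s * amp
    return new


def apply_cx(state, control, target, n):
    """Flip `target` in every basis state where `control` is 1."""
    cbit = 1 << (n - 1 - control)
    tbit = 1 << (n - 1 - target)
    new = [0.0] * len(state)
    for i, amp in enumerate(state):
        j = i ^ tbit if i & cbit else i
        new[j] = amp
    return new


def ghz_probabilities(n=5):
    """Return the nonzero outcome probabilities of an n-qubit GHZ circuit."""
    state = [0.0] * (2**n)
    state[0] = 1.0  # start in |00...0>
    state = apply_h(state, 0, n)
    for i in range(n - 1):
        state = apply_cx(state, i, i + 1, n)
    return {
        format(i, f"0{n}b"): amp * amp
        for i, amp in enumerate(state)
        if abs(amp) > 1e-12
    }


probs = ghz_probabilities(5)
print(probs)
```

Only the all-zeros and all-ones bitstrings carry probability, each close to one half. Counts from real hardware will typically also contain other bitstrings because of noise.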

Infleqtion

The following code illustrates how to run kernels on Infleqtion’s backends.

import cudaq

# You only have to set the target once! No need to redefine it

# for every execution call on your kernel.

# To use different targets in the same file, you must update

# it via another call to `cudaq.set_target()`

cudaq.set_target("infleqtion")

# Create the kernel we'd like to execute on Infleqtion.

@cudaq.kernel
def kernel():
    qvector = cudaq.qvector(2)
    h(qvector[0])
    x.ctrl(qvector[0], qvector[1])

# Note: All measurements must be terminal when performing the sampling.

# Execute on Infleqtion and print out the results.

# Option A (recommended):

# By using the asynchronous `cudaq.sample_async`, the remaining

# classical code will be executed while the job is being handled

# by the Superstaq API. This is ideal when submitting via a queue

# over the cloud.

async_results = cudaq.sample_async(kernel)

# ... more classical code to run ...

# We can either retrieve the results later in the program with

# ```

# async_counts = async_results.get()

# ```

# or we can also write the job reference (`async_results`) to

# a file and load it later or from a different process.

file = open("future.txt", "w")

file.write(str(async_results))

file.close()

# We can later read the file content and retrieve the job

# information and results.

same_file = open("future.txt", "r")

retrieved_async_results = cudaq.AsyncSampleResult(str(same_file.read()))

counts = retrieved_async_results.get()

print(counts)

# Option B:

# By using the synchronous `cudaq.sample`, the execution of

# any remaining classical code in the file will occur only

# after the job has been returned from Superstaq.

counts = cudaq.sample(kernel)

print(counts)

// Compile and run with:

// ```

// nvq++ --target infleqtion infleqtion.cpp -o out.x && ./out.x

// ```

// This will submit the job to the ideal simulator for Infleqtion,

// `cq_sqale_simulator` (default). Alternatively, we can enable hardware noise

// model simulation by specifying `noise-sim` to the flag `--infleqtion-method`,

// e.g.,

// ```

// nvq++ --target infleqtion --infleqtion-machine cq_sqale_qpu \

// --infleqtion-method noise-sim infleqtion.cpp -o out.x && ./out.x

// ```

// where "noise-sim" instructs Superstaq to perform a noisy emulation of the

// QPU. An ideal dry-run execution on the QPU may be performed by passing

// `dry-run` to the `--infleqtion-method` flag, e.g.,

// ```

// nvq++ --target infleqtion --infleqtion-machine cq_sqale_qpu \

// --infleqtion-method dry-run infleqtion.cpp -o out.x && ./out.x

// ```

// Note: If targeting ideal cloud simulation,

// `--infleqtion-machine cq_sqale_simulator` is optional since it is the

// default configuration if not provided.

#include <cudaq.h>

#include <fstream>

// Define a simple quantum kernel to execute on Infleqtion backends.

struct ghz {

// Maximally entangled state between 5 qubits.

auto operator()() __qpu__ {

cudaq::qvector q(5);

h(q[0]);

for (int i = 0; i < 4; i++) {

x<cudaq::ctrl>(q[i], q[i + 1]);

}

mz(q);

}

};

int main() {

// Submit to Infleqtion asynchronously (e.g., continue executing

// code in the file until the job has been returned).

auto future = cudaq::sample_async(ghz{});

// ... classical code to execute in the meantime ...

// Can write the future to file:

{

std::ofstream out("saveMe.json");

out << future;

}

// Then come back and read it in later.

cudaq::async_result<cudaq::sample_result> readIn;

std::ifstream in("saveMe.json");

in >> readIn;

// Get the results of the read in future.

auto async_counts = readIn.get();

async_counts.dump();

// OR: Submit to Infleqtion synchronously (e.g., wait for the job

// result to be returned before proceeding).

auto counts = cudaq::sample(ghz{});

counts.dump();

}

IonQ

The following code illustrates how to run kernels on IonQ’s backends.

import cudaq

# You only have to set the target once! No need to redefine it

# for every execution call on your kernel.

# To use different targets in the same file, you must update

# it via another call to `cudaq.set_target()`

cudaq.set_target("ionq")

# Create the kernel we'd like to execute on IonQ.

@cudaq.kernel
def kernel():
    qvector = cudaq.qvector(2)
    h(qvector[0])
    x.ctrl(qvector[0], qvector[1])

# Note: All qubits will be measured at the end upon performing

# the sampling. You may encounter a pre-flight error on IonQ

# backends if you include explicit measurements.

# Execute on IonQ and print out the results.

# Option A:

# By using the asynchronous `cudaq.sample_async`, the remaining

# classical code will be executed while the job is being handled

# by IonQ. This is ideal when submitting via a queue over

# the cloud.

async_results = cudaq.sample_async(kernel)

# ... more classical code to run ...

# We can either retrieve the results later in the program with

# ```

# async_counts = async_results.get()

# ```

# or we can also write the job reference (`async_results`) to

# a file and load it later or from a different process.

file = open("future.txt", "w")

file.write(str(async_results))

file.close()

# We can later read the file content and retrieve the job

# information and results.

same_file = open("future.txt", "r")

retrieved_async_results = cudaq.AsyncSampleResult(str(same_file.read()))

counts = retrieved_async_results.get()

print(counts)

# Option B:

# By using the synchronous `cudaq.sample`, the execution of

# any remaining classical code in the file will occur only

# after the job has been returned from IonQ.

counts = cudaq.sample(kernel)

print(counts)
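The difference between Option A and Option B is the usual future pattern: submission returns at once, and the program blocks only when the result is requested. The sketch below mimics that control flow with Python's `concurrent.futures` as an analogy. It does not call CUDA-Q or IonQ, `fake_remote_job` is a hypothetical stand-in, and its counts are made up purely to give the future something to return.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_remote_job(shots):
    """Hypothetical stand-in for a job handled by a remote backend."""
    time.sleep(0.1)  # pretend the job waits in a queue
    return {"00": shots // 2, "11": shots - shots // 2}


with ThreadPoolExecutor(max_workers=1) as pool:
    # Asynchronous style: `submit` returns a future immediately.
    future = pool.submit(fake_remote_job, 1000)
    classical_work = sum(range(10))  # runs while the job is pending
    async_counts = future.result()  # blocks only here, like `.get()`

# Synchronous style: nothing else runs until the result is back.
sync_counts = fake_remote_job(1000)

print(classical_work, async_counts, sync_counts)
```

The asynchronous form pays off when the classical work between submission and retrieval is substantial, or when the queue wait is long.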

// Compile and run with:

// ```

// nvq++ --target ionq ionq.cpp -o out.x && ./out.x

// ```

// This will submit the job to the IonQ ideal simulator target (default).

// Alternatively, we can enable hardware noise model simulation by specifying

// the `--ionq-noise-model`, e.g.,

// ```

// nvq++ --target ionq --ionq-machine simulator --ionq-noise-model aria-1 \

// ionq.cpp -o out.x && ./out.x

// ```

// where we set the noise model to mimic the 'aria-1' hardware device.

// Please refer to your IonQ Cloud dashboard for the list of simulator noise

// models.

// Note: `--ionq-machine simulator` is optional since 'simulator' is the

// default configuration if not provided. Assumes a valid set of credentials

// have been stored.

#include <cudaq.h>

#include <fstream>

// Define a simple quantum kernel to execute on IonQ.

struct ghz {

// Maximally entangled state between 5 qubits.

auto operator()() __qpu__ {

cudaq::qvector q(5);

h(q[0]);

for (int i = 0; i < 4; i++) {

x<cudaq::ctrl>(q[i], q[i + 1]);

}

mz(q);

}

};

int main() {

// Submit to IonQ asynchronously (e.g., continue executing

// code in the file until the job has been returned).

auto future = cudaq::sample_async(ghz{});

// ... classical code to execute in the meantime ...

// Can write the future to file:

{

std::ofstream out("saveMe.json");

out << future;

}

// Then come back and read it in later.

cudaq::async_result<cudaq::sample_result> readIn;

std::ifstream in("saveMe.json");

in >> readIn;

// Get the results of the read in future.

auto async_counts = readIn.get();

async_counts.dump();

// OR: Submit to IonQ synchronously (e.g., wait for the job

// result to be returned before proceeding).

auto counts = cudaq::sample(ghz{});

counts.dump();

}

IQM

The following code illustrates how to run kernels on IQM’s backends.

import cudaq

# You only have to set the target once! No need to redefine it

# for every execution call on your kernel.

# To use different targets in the same file, you must update

# it via another call to `cudaq.set_target()`

cudaq.set_target("iqm", url="http://localhost/")

# Crystal_5 QPU architecture:

# QB1

# |

# QB2 - QB3 - QB4

# |

# QB5

# Create the kernel we'd like to execute on IQM.

@cudaq.kernel
def kernel():
    qvector = cudaq.qvector(5)
    h(qvector[2])  # QB3
    x.ctrl(qvector[2], qvector[0])
    mz(qvector)

# Execute on IQM Server and print out the results.

# Option A:

# By using the asynchronous `cudaq.sample_async`, the remaining

# classical code will be executed while the job is being handled

# by IQM Server. This is ideal when submitting via a queue over

# the cloud.

async_results = cudaq.sample_async(kernel)

# ... more classical code to run ...

# We can either retrieve the results later in the program with

# ```

# async_counts = async_results.get()

# ```

# or we can also write the job reference (`async_results`) to

# a file and load it later or from a different process.

file = open("future.txt", "w")

file.write(str(async_results))

file.close()

# We can later read the file content and retrieve the job

# information and results.

same_file = open("future.txt", "r")

retrieved_async_results = cudaq.AsyncSampleResult(str(same_file.read()))

counts = retrieved_async_results.get()

print(counts)

# Option B:

# By using the synchronous `cudaq.sample`, the execution of

# any remaining classical code in the file will occur only

# after the job has been returned from IQM Server.

counts = cudaq.sample(kernel)

print(counts)

// Compile and run with:

// ```

// nvq++ --target iqm iqm.cpp -o out.x && ./out.x

// ```

// Assumes a valid set of credentials have been stored.

#include <cudaq.h>

#include <fstream>

// Define a simple quantum kernel to execute on IQM Server.

struct crystal_5_ghz {

// Maximally entangled state between 5 qubits on Crystal_5 QPU.

// QB1

// |

// QB2 - QB3 - QB4

// |

// QB5

void operator()() __qpu__ {

cudaq::qvector q(5);

h(q[0]);

// Note that the CUDA-Q compiler will automatically generate the

// necessary instructions to swap qubits to satisfy the required

// connectivity constraints for the Crystal_5 QPU. In this program, that

// means that despite QB1 not being physically connected to QB2, the user

// can still perform joint operations q[0] and q[1] because the compiler

// will automatically (and transparently) inject the necessary swap

// instructions to execute the user's program without the user having to

// worry about the physical constraints.

for (int i = 0; i < 4; i++) {

x<cudaq::ctrl>(q[i], q[i + 1]);

}

mz(q);

}

};

int main() {

// Submit to IQM Server asynchronously, i.e., continue executing

// code in the file until the job has been returned.

auto future = cudaq::sample_async(crystal_5_ghz{});

// ... classical code to execute in the meantime ...

// Can write the future to file:

{

std::ofstream out("saveMe.json");

out << future;

}

// Then come back and read it in later.

cudaq::async_result<cudaq::sample_result> readIn;

std::ifstream in("saveMe.json");

in >> readIn;

// Get the results of the read in future.

auto async_counts = readIn.get();

async_counts.dump();

// OR: Submit to IQM Server synchronously, i.e., wait for the job

// result to be returned before proceeding.

auto counts = cudaq::sample(crystal_5_ghz{});

counts.dump();

}
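The comments in the C++ kernel note that the compiler inserts swaps when two qubits are not physically connected. As a rough way to see which gates in the GHZ chain that affects, the sketch below encodes the Crystal_5 star layout from the diagram and checks each controlled-X pair. It assumes `q[i]` is placed on `QB(i+1)`, which is an assumption of this illustration; the compiler is free to choose a different placement. The names `CRYSTAL_5_COUPLERS` and `is_native` are hypothetical.

```python
# Crystal_5 couplers as drawn in the diagram: QB3 sits in the center.
CRYSTAL_5_COUPLERS = {
    frozenset({"QB1", "QB3"}),
    frozenset({"QB2", "QB3"}),
    frozenset({"QB3", "QB4"}),
    frozenset({"QB3", "QB5"}),
}


def is_native(control: int, target: int) -> bool:
    """Return True if q[control] and q[target] share a coupler.

    Assumes q[i] is placed on QB(i+1); a real compiler may map
    qubits differently.
    """
    pair = frozenset({f"QB{control + 1}", f"QB{target + 1}"})
    return pair in CRYSTAL_5_COUPLERS


# The controlled-X chain used by the C++ GHZ kernel.
ghz_chain = [(i, i + 1) for i in range(4)]
needs_routing = [pair for pair in ghz_chain if not is_native(*pair)]
print(needs_routing)  # [(0, 1), (3, 4)]
```

Under this placement, the Python kernel's `x.ctrl(qvector[2], qvector[0])` acts on QB3 and QB1, which are directly coupled, so it would need no routing.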

OQC

The following code illustrates how to run kernels on OQC’s backends.

import cudaq

# You only have to set the target once! No need to redefine it

# for every execution call on your kernel.

# To use different targets in the same file, you must update

# it via another call to `cudaq.set_target()`

# To use the OQC target you will need to set the following environment variables

# OQC_URL

# OQC_EMAIL

# OQC_PASSWORD

# To set up an account, contact oqc_qcaas_support@oxfordquantumcircuits.com

cudaq.set_target("oqc")

# Create the kernel we'd like to execute on OQC.

@cudaq.kernel
def kernel():
    qvector = cudaq.qvector(2)
    h(qvector[0])
    x.ctrl(qvector[0], qvector[1])
    mz(qvector)

# Option A:

# By using the asynchronous `cudaq.sample_async`, the remaining

# classical code will be executed while the job is being handled

# by OQC. This is ideal when submitting via a queue over

# the cloud.

async_results = cudaq.sample_async(kernel)

# ... more classical code to run ...

# We can either retrieve the results later in the program with

# ```

# async_counts = async_results.get()

# ```

# or we can also write the job reference (`async_results`) to

# a file and load it later or from a different process.

file = open("future.txt", "w")

file.write(str(async_results))

file.close()

# We can later read the file content and retrieve the job

# information and results.

same_file = open("future.txt", "r")

retrieved_async_results = cudaq.AsyncSampleResult(str(same_file.read()))

counts = retrieved_async_results.get()

print(counts)

# Option B:

# By using the synchronous `cudaq.sample`, the execution of

# any remaining classical code in the file will occur only

# after the job has been returned from OQC.

counts = cudaq.sample(kernel)

print(counts)
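Because the OQC target reads its credentials from environment variables, it can be convenient to check for them before calling `cudaq.set_target("oqc")`. The sketch below is one possible pre-flight check using only the standard library. The helper `missing_oqc_variables` is a hypothetical name, not a CUDA-Q function, and the example values are placeholders.

```python
import os

REQUIRED_OQC_VARIABLES = ("OQC_URL", "OQC_EMAIL", "OQC_PASSWORD")


def missing_oqc_variables(env=None):
    """Return the required OQC variables that are unset or empty."""
    env = os.environ if env is None else env
    return [name for name in REQUIRED_OQC_VARIABLES if not env.get(name)]


# Example with an explicit mapping instead of the real environment.
example_env = {
    "OQC_URL": "https://example.invalid",  # placeholder value
    "OQC_EMAIL": "user@example.invalid",  # placeholder value
}
print(missing_oqc_variables(example_env))  # ['OQC_PASSWORD']
```

Calling `missing_oqc_variables()` with no argument inspects the real process environment.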

// Compile and run with:

// ```

// nvq++ --target oqc oqc.cpp -o out.x && ./out.x

// ```

// This will submit the job to the OQC platform. You can also specify

// the machine to use via the `--oqc-machine` flag:

// ```

// nvq++ --target oqc --oqc-machine lucy oqc.cpp -o out.x && ./out.x

// ```

// The default is the 8 qubit Lucy device. You can set this to be either

// `toshiko` or `lucy` via this flag.

//

// To use the OQC target you will need to set the following environment

// variables: OQC_URL, OQC_EMAIL, and OQC_PASSWORD. To set up an account, contact

// oqc_qcaas_support@oxfordquantumcircuits.com

#include <cudaq.h>

#include <fstream>

// Define a simple quantum kernel to execute on OQC backends.

struct bell_state {

auto operator()() __qpu__ {

cudaq::qvector q(2);

h(q[0]);

x<cudaq::ctrl>(q[0], q[1]);

}

};

int main() {

// Submit to OQC asynchronously (e.g., continue executing

// code in the file until the job has been returned).

auto future = cudaq::sample_async(bell_state{});

// ... classical code to execute in the meantime ...

// Can write the future to file:

{

std::ofstream out("future.json");

out << future;

}

// Then come back and read it in later.

cudaq::async_result<cudaq::sample_result> readIn;

std::ifstream in("future.json");

in >> readIn;

// Get the results of the read in future.

auto async_counts = readIn.get();

async_counts.dump();

// OR: Submit to OQC synchronously (e.g., wait for the job

// result to be returned before proceeding).

auto counts = cudaq::sample(bell_state{});

counts.dump();

}

ORCA Computing

The following code illustrates how to run kernels on ORCA Computing’s backends.

ORCA Computing’s PT Series implements the boson sampling model of quantum computation, in which multiple photons interfere with each other within a network of beam splitters, and photon detectors measure where the photons leave this network.

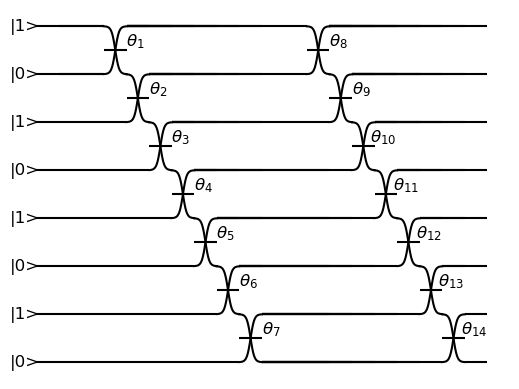

The following image shows the schematic of a Time Bin Interferometer (TBI) boson sampling experiment that runs on ORCA Computing’s backends. A TBI uses optical delay lines with reconfigurable coupling parameters. A TBI can be represented by a circuit diagram, like the one below, where this illustration example corresponds to 4 photons in 8 modes sent into alternating time-bins in a circuit composed of two delay lines in series.

The parameters needed to define the time bin interferometer are the input state, the loop lengths, the beam splitter angles, optionally the phase shifter angles, and the number of samples. The input state is the initial state of the photons in the time bin interferometer; the left-most entry corresponds to the first mode entering the loop. The loop lengths are the lengths of the different loops in the time bin interferometer. The beam splitter angles and the phase shifter angles are controllable parameters of the time bin interferometer.

This experiment is performed on ORCA’s backends by the code below.

import cudaq

import time

import numpy as np

import os

# You only have to set the target once! No need to redefine it

# for every execution call on your kernel.

# To use different targets in the same file, you must update

# it via another call to `cudaq.set_target()`

# To use the ORCA Computing target you will need to set the ORCA_ACCESS_URL

# environment variable or pass a URL.

orca_url = os.getenv("ORCA_ACCESS_URL", "http://localhost/sample")

cudaq.set_target("orca", url=orca_url)

# A time-bin boson sampling experiment: An input state of 4 indistinguishable

# photons mixed with 4 vacuum states across 8 time bins (modes) enter the

# time bin interferometer (TBI). The interferometer is composed of two loops

# each with a beam splitter (and optionally with a corresponding phase

# shifter). Each photon can either be stored in a loop to interfere with the

# next photon or exit the loop to be measured. Since there are 8 time bins

# and 2 loops, there is a total of 14 beam splitters (and optionally 14 phase

# shifters) in the interferometer, which is the number of controllable

# parameters.

# half of 8 time bins is filled with a single photon and the other half is

# filled with the vacuum state (empty)

input_state = [1, 0, 1, 0, 1, 0, 1, 0]

# The time bin interferometer in this example has two loops, each of length 1

loop_lengths = [1, 1]

# Calculate the number of beam splitters and phase shifters

n_beam_splitters = len(loop_lengths) * len(input_state) - sum(loop_lengths)

# beam splitter angles

bs_angles = np.linspace(np.pi / 3, np.pi / 6, n_beam_splitters)

# Optionally, we can also specify the phase shifter angles, if the system

# includes phase shifters

# ```

# ps_angles = np.linspace(np.pi / 3, np.pi / 5, n_beam_splitters)

# ```

# we can also set number of requested samples

n_samples = 10000

# Option A:

# By using the synchronous `cudaq.orca.sample`, the execution of

# any remaining classical code in the file will occur only

# after the job has been returned from ORCA Server.

print("Submitting to ORCA Server synchronously")

counts = cudaq.orca.sample(input_state, loop_lengths, bs_angles, n_samples)

# If the system includes phase shifters, the phase shifter angles can be

# included in the call

# ```

# counts = cudaq.orca.sample(input_state, loop_lengths, bs_angles, ps_angles,

# n_samples)

# ```

# Print the results

print(counts)

# Option B:

# By using the asynchronous `cudaq.orca.sample_async`, the remaining

# classical code will be executed while the job is being handled

# by ORCA. This is ideal when submitting via a queue over

# the cloud.

print("Submitting to ORCA Server asynchronously")

async_results = cudaq.orca.sample_async(input_state, loop_lengths, bs_angles,

n_samples)

# ... more classical code to run ...

# We can either retrieve the results later in the program with

# ```

# async_counts = async_results.get()

# ```

# or we can also write the job reference (`async_results`) to

# a file and load it later or from a different process.

file = open("future.txt", "w")

file.write(str(async_results))

file.close()

# We can later read the file content and retrieve the job

# information and results.

time.sleep(0.2) # wait for the job to be processed

same_file = open("future.txt", "r")

retrieved_async_results = cudaq.AsyncSampleResult(str(same_file.read()))

counts = retrieved_async_results.get()

print(counts)
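The beam splitter count used in the Python example follows from the loop lengths. One way to read the formula, offered here as an interpretation rather than as ORCA's own description, is that a loop of length L gives n_modes - L beam splitter interactions, so two loops of length 1 over 8 modes give 7 + 7 = 14. The sketch below reproduces that arithmetic without NumPy or CUDA-Q. The helper names `count_beam_splitters` and `linear_angles` are hypothetical.

```python
import math


def count_beam_splitters(input_state, loop_lengths):
    """Sum (n_modes - L) over loops; equals n_loops * n_modes - sum(L)."""
    n_modes = len(input_state)
    return sum(n_modes - length for length in loop_lengths)


def linear_angles(start, stop, count):
    """Evenly spaced values from start to stop inclusive."""
    if count == 1:
        return [start]
    step = (stop - start) / (count - 1)
    return [start + i * step for i in range(count)]


input_state = [1, 0, 1, 0, 1, 0, 1, 0]
loop_lengths = [1, 1]

n_beam_splitters = count_beam_splitters(input_state, loop_lengths)
bs_angles = linear_angles(math.pi / 3, math.pi / 6, n_beam_splitters)
print(n_beam_splitters, len(bs_angles))  # 14 14
```

The angle list has to contain exactly one entry per beam splitter, which is why the example derives its length from the same count.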

// Compile and run with:

// ```

// nvq++ --target orca --orca-url $ORCA_ACCESS_URL orca.cpp -o out.x && ./out.x

// ```

// To use the ORCA Computing target you will need to set the ORCA_ACCESS_URL

// environment variable or pass the URL to the `--orca-url` flag.

#include <chrono>

#include <cudaq.h>

#include <cudaq/orca.h>

#include <fstream>

#include <iostream>

#include <numeric>

#include <thread>

int main() {

using namespace std::this_thread; // sleep_for, sleep_until

using namespace std::chrono_literals; // `ns`, `us`, `ms`, `s`, `h`, etc.

// A time-bin boson sampling experiment: An input state of 4 indistinguishable

// photons mixed with 4 vacuum states across 8 time bins (modes) enter the

// time bin interferometer (TBI). The interferometer is composed of two loops

// each with a beam splitter (and optionally with a corresponding phase

// shifter). Each photon can either be stored in a loop to interfere with the

// next photon or exit the loop to be measured. Since there are 8 time bins

// and 2 loops, there is a total of 14 beam splitters (and optionally 14 phase

// shifters) in the interferometer, which is the number of controllable

// parameters.

// half of 8 time bins is filled with a single photon and the other half is

// filled with the vacuum state (empty)

std::vector<std::size_t> input_state = {1, 0, 1, 0, 1, 0, 1, 0};

// The time bin interferometer in this example has two loops, each of length 1

std::vector<std::size_t> loop_lengths = {1, 1};

// helper variables to calculate the number of beam splitters and phase

// shifters needed in the TBI

std::size_t sum_loop_lengths{std::accumulate(

loop_lengths.begin(), loop_lengths.end(), static_cast<std::size_t>(0))};

const std::size_t n_loops = loop_lengths.size();

const std::size_t n_modes = input_state.size();

const std::size_t n_beam_splitters = n_loops * n_modes - sum_loop_lengths;

// beam splitter angles (created as a linear spaced vector of angles)

std::vector<double> bs_angles =

cudaq::linspace(M_PI / 3, M_PI / 6, n_beam_splitters);

// Optionally, we can also specify the phase shifter angles (created as a

// linear spaced vector of angles), if the system includes phase shifters

// ```

// std::vector<double> ps_angles = cudaq::linspace(M_PI / 3, M_PI / 5,

// n_beam_splitters);

// ```

// we can also set number of requested samples

int n_samples = 10000;

// Submit to ORCA synchronously (e.g., wait for the job result to be

// returned before proceeding with the rest of the execution).

std::cout << "Submitting to ORCA Server synchronously" << std::endl;

auto counts =

cudaq::orca::sample(input_state, loop_lengths, bs_angles, n_samples);

// Print the results

counts.dump();

// If the system includes phase shifters, the phase shifter angles can be

// included in the call

// ```

// auto counts = cudaq::orca::sample(input_state, loop_lengths, bs_angles,

// ps_angles, n_samples);

// ```

// Alternatively we can submit to ORCA asynchronously (e.g., continue

// executing code in the file until the job has been returned).

std::cout << "Submitting to ORCA Server asynchronously" << std::endl;

auto async_results = cudaq::orca::sample_async(input_state, loop_lengths,

bs_angles, n_samples);

// Can write the future to file:

{

std::ofstream out("saveMe.json");

out << async_results;

}

// Then come back and read it in later.

cudaq::async_result<cudaq::sample_result> readIn;

std::ifstream in("saveMe.json");

in >> readIn;

sleep_for(200ms); // wait for the job to be processed

// Get the results of the read in future.

auto async_counts = readIn.get();

async_counts.dump();

return 0;

}

Pasqal

The following code illustrates how to run kernels on Pasqal’s backends.

import cudaq

from cudaq.operators import RydbergHamiltonian, ScalarOperator

from cudaq.dynamics import Schedule

# This example illustrates how to use Pasqal's EMU_MPS emulator over Pasqal's cloud via CUDA-Q.

#

# To obtain the authentication token for the cloud we recommend logging in with

# Pasqal's Python SDK. See our documentation https://docs.pasqal.com/cloud/ for more.

#

# Contact Pasqal at help@pasqal.com or through https://community.pasqal.com for assistance.

#

# Visit the documentation portal, https://docs.pasqal.com/, to find further

# documentation on Pasqal's devices, emulators and the cloud platform.

#

# For more details on the EMU_MPS emulator see the documentation of the open-source

# package: https://pasqal-io.github.io/emulators/latest/emu_mps/.

from pasqal_cloud import SDK

import os

# We recommend leaving the password empty in an interactive session as you will be

# prompted to enter it securely via the command line interface.

sdk = SDK(

username=os.environ.get("PASQAL_USERNAME"),

password=os.environ.get("PASQAL_PASSWORD", None),

)

os.environ["PASQAL_AUTH_TOKEN"] = str(sdk.user_token())

# It is also mandatory to specify the project against which the execution will be billed.

# Uncomment this line to set it from Python, or export it as an environment variable

# prior to execution. You can find your projects here: https://portal.pasqal.cloud/projects.

# ```

# os.environ['PASQAL_PROJECT_ID'] = 'your project id'

# ```

# Set the target including specifying optional arguments like target machine

cudaq.set_target("pasqal",

machine=os.environ.get("PASQAL_MACHINE_TARGET", "EMU_MPS"))

# ```

## To target QPU set FRESNEL as the machine, see our cloud portal for latest machine names

# cudaq.set_target("pasqal", machine="FRESNEL")

# ```

# Define the 2-dimensional atom arrangement

a = 5e-6

register = [(a, 0), (2 * a, 0), (3 * a, 0)]

time_ramp = 0.000001

time_max = 0.000003

# Times for the piece-wise linear waveforms

steps = [0.0, time_ramp, time_max - time_ramp, time_max]

schedule = Schedule(steps, ["t"])

# Rabi frequencies at each step

omega_max = 1000000

delta_end = 1000000

delta_start = 0.0

omega = ScalarOperator(lambda t: omega_max

if time_ramp < t.real < time_max else 0.0)

# Global phase at each step

phi = ScalarOperator.const(0.0)

# Global detuning at each step

delta = ScalarOperator(lambda t: delta_end

if time_ramp < t.real < time_max else delta_start)

async_result = cudaq.evolve_async(RydbergHamiltonian(atom_sites=register,

amplitude=omega,

phase=phi,

delta_global=delta),

schedule=schedule,

shots_count=100).get()

async_result.dump()

## Sample result

# ```

# {'001': 16, '010': 23, '100': 19, '000': 42}

# ```
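The `omega` and `delta` callbacks above are step-like functions of time. As a quick sanity check that needs neither CUDA-Q nor Pasqal credentials, the sketch below evaluates the same two lambda expressions in plain Python at the four schedule steps. The `waveform_table` helper is hypothetical and exists only for this illustration; it assumes the callbacks are evaluated exactly at the listed steps, which may differ from how the backend samples them.

```python
# Plain-Python check of the piecewise waveforms; no CUDA-Q calls are made.
time_ramp = 0.000001  # seconds
time_max = 0.000003  # seconds
omega_max = 1000000  # rad / sec
delta_start = 0.0
delta_end = 1000000

steps = [0.0, time_ramp, time_max - time_ramp, time_max]

# Same expressions as the lambdas passed to ScalarOperator in the example.
omega_fn = lambda t: omega_max if time_ramp < t.real < time_max else 0.0
delta_fn = lambda t: delta_end if time_ramp < t.real < time_max else delta_start


def waveform_table(times):
    """Hypothetical helper: return (t, omega(t), delta(t)) for each time."""
    return [(t, omega_fn(complex(t)), delta_fn(complex(t))) for t in times]


table = waveform_table(steps)
for t, om, de in table:
    print(f"t = {t:.1e} s  omega = {om:.1e}  delta = {de:.1e}")
```

Because both comparisons are strict, the two waveforms take their maximum value only at the third step (`time_max - time_ramp`); at `time_ramp` and `time_max` themselves they remain at their start values.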

// Compile and run with:

// ```

// nvq++ --target pasqal pasqal.cpp -o out.x

// ./out.x

// ```

// Assumes a valid set of credentials (`PASQAL_AUTH_TOKEN`, `PASQAL_PROJECT_ID`)

// have been set.

#include "cudaq/algorithms/evolve.h"

#include "cudaq/algorithms/integrator.h"

#include "cudaq/operators.h"

#include "cudaq/schedule.h"

#include <cmath>

#include <complex>

#include <string>

#include <unordered_map>

#include <utility>

#include <vector>

// This example illustrates how to use `Pasqal's` EMU_MPS emulator over

// `Pasqal's` cloud via CUDA-Q. Contact Pasqal at help@pasqal.com or through

// https://community.pasqal.com for assistance.

int main() {

// Topology initialization

const double a = 5e-6;

std::vector<std::pair<double, double>> register_sites;

register_sites.push_back(std::make_pair(a, 0.0));

register_sites.push_back(std::make_pair(2 * a, 0.0));

register_sites.push_back(std::make_pair(3 * a, 0.0));

// Simulation Timing

const double time_ramp = 0.000001; // seconds

const double time_max = 0.000003; // seconds

const double omega_max = 1000000; // rad/sec

const double delta_end = 1000000;

const double delta_start = 0.0;

std::vector<std::complex<double>> steps = {0.0, time_ramp,

time_max - time_ramp, time_max};

cudaq::schedule schedule(steps, {"t"}, {});

// Basic Rydberg Hamiltonian

auto omega = cudaq::scalar_operator(

[time_ramp, time_max,

omega_max](const std::unordered_map<std::string, std::complex<double>>

&parameters) {

double t = std::real(parameters.at("t"));

return std::complex<double>(

(t > time_ramp && t < time_max) ? omega_max : 0.0, 0.0);

});

auto phi = cudaq::scalar_operator(0.0);

auto delta = cudaq::scalar_operator(

[time_ramp, time_max, delta_start,

delta_end](const std::unordered_map<std::string, std::complex<double>>

&parameters) {

double t = std::real(parameters.at("t"));

return std::complex<double>(

(t > time_ramp && t < time_max) ? delta_end : delta_start, 0.0);

});

auto hamiltonian =

cudaq::rydberg_hamiltonian(register_sites, omega, phi, delta);

// Evolve the system

auto result = cudaq::evolve(hamiltonian, schedule, 100);

result.sampling_result->dump();

return 0;

}

Quantinuum¶

The following code illustrates how to run kernels on Quantinuum’s backends.

import cudaq

import os

# You only have to set the target once! No need to redefine it for every

# execution call on your kernel.

# By default, we will submit to the Quantinuum syntax checker.

## NOTE: It is mandatory to specify the Nexus project by name or ID.

# Update and un-comment the line below.

# ```

# cudaq.set_target("quantinuum", project="nexus_project")

# ```

# Or use environment variable

# ```

# os.environ["QUANTINUUM_NEXUS_PROJECT"] = "nexus_project"

# ```

cudaq.set_target("quantinuum",

project=os.environ.get("QUANTINUUM_NEXUS_PROJECT", None))

# Create the kernel we'd like to execute on Quantinuum.

@cudaq.kernel

def kernel():

    qvector = cudaq.qvector(2)

    h(qvector[0])

    x.ctrl(qvector[0], qvector[1])

# Submit to Quantinuum's endpoint and confirm the program is valid.

# Option A:

# By using the synchronous `cudaq.sample`, the execution of

# any remaining classical code in the file will occur only

# after the job has been executed by the Quantinuum service.

# We will use the synchronous call to submit to the syntax

# checker to confirm the validity of the program.

syntax_check = cudaq.sample(kernel)

if syntax_check:

    print("Syntax check passed! Kernel is ready for submission.")

# Now we can update the target to the Quantinuum emulator and

# execute our program.

cudaq.set_target("quantinuum",

machine="H2-1E",

project=os.environ.get("QUANTINUUM_NEXUS_PROJECT", None))

# Option B:

# By using the asynchronous `cudaq.sample_async`, the remaining

# classical code will be executed while the job is being handled

# by Quantinuum. This is ideal when submitting via a queue over

# the cloud.

async_results = cudaq.sample_async(kernel)

# ... more classical code to run ...

# We can either retrieve the results later in the program with

# ```

# async_counts = async_results.get()

# ```

# or we can also write the job reference (`async_results`) to

# a file and load it later or from a different process.

with open("future.txt", "w") as file:

    file.write(str(async_results))

# We can later read the file content and retrieve the job

# information and results.

with open("future.txt", "r") as same_file:

    retrieved_async_results = cudaq.AsyncSampleResult(same_file.read())

counts = retrieved_async_results.get()

print(counts)
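The example passes `os.environ.get("QUANTINUUM_NEXUS_PROJECT", None)` to `cudaq.set_target`, so an unset variable is forwarded as `None` even though the project is mandatory. A minimal sketch of failing early with a clearer message is shown below. `require_env` is a hypothetical helper, not part of CUDA-Q, and the optional `env` argument exists only so that the function can be exercised without touching the real environment.

```python
import os


def require_env(name, env=None):
    """Return the value of environment variable `name` or raise a clear error.

    Hypothetical helper for illustration; `env` defaults to `os.environ`.
    """
    env = os.environ if env is None else env
    value = env.get(name)
    if not value:
        raise RuntimeError(
            f"Environment variable {name} is not set; export it before "
            "selecting the target.")
    return value


# Demonstration with an explicit mapping instead of the real environment.
demo_env = {"QUANTINUUM_NEXUS_PROJECT": "nexus_project"}
project = require_env("QUANTINUUM_NEXUS_PROJECT", env=demo_env)
print(project)
```

With such a helper, the target selection could read `cudaq.set_target("quantinuum", project=require_env("QUANTINUUM_NEXUS_PROJECT"))`.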

// Compile and run with:

// ```

// nvq++ --target quantinuum --quantinuum-machine H2-1E --quantinuum-project \

// <nexus_project> quantinuum.cpp -o out.x && ./out.x

// ```

// Assumes a valid set of credentials have been stored.

// To first confirm the correctness of the program locally,

// add `--emulate` to the `nvq++` command above.

#include <cudaq.h>

#include <fstream>

// Define a simple quantum kernel to execute on Quantinuum.

struct ghz {

// Maximally entangled state between 5 qubits.

auto operator()() __qpu__ {

cudaq::qvector q(5);

h(q[0]);

for (int i = 0; i < 4; i++) {

x<cudaq::ctrl>(q[i], q[i + 1]);

}

}

};

int main() {

// Submit to Quantinuum asynchronously (i.e., continue executing

// code in the file until the job has been returned).

auto future = cudaq::sample_async(ghz{});

// ... classical code to execute in the meantime ...

// Can write the future to file:

{

std::ofstream out("saveMe.json");

out << future;

}

// Then come back and read it in later.

cudaq::async_result<cudaq::sample_result> readIn;

std::ifstream in("saveMe.json");

in >> readIn;

// Get the results of the read in future.

auto async_counts = readIn.get();

async_counts.dump();

// OR: Submit to Quantinuum synchronously (i.e., wait for the job

// result to be returned before proceeding).

auto counts = cudaq::sample(ghz{});

counts.dump();

}

Quantum Circuits, Inc.¶

The following code illustrates how to run kernels on Quantum Circuits’ backends.

import cudaq

# Make sure to export or otherwise present your user token via the environment,

# e.g., using export:

# ```

# export QCI_AUTH_TOKEN="your token here"

# ```

#

# The example will run on QCI's AquSim simulator by default.

cudaq.set_target("qci")

@cudaq.kernel

def teleportation():

    # Initialize a three qubit quantum circuit

    qubits = cudaq.qvector(3)

    # Random quantum state on qubit 0.

    rx(3.14, qubits[0])

    ry(2.71, qubits[0])

    rz(6.62, qubits[0])

    # Create a maximally entangled state on qubits 1 and 2.

    h(qubits[1])

    cx(qubits[1], qubits[2])

    cx(qubits[0], qubits[1])

    h(qubits[0])

    m1 = mz(qubits[0])

    m2 = mz(qubits[1])

    if m1 == 1:

        z(qubits[2])

    if m2 == 1:

        x(qubits[2])

    mz(qubits)

print(cudaq.sample(teleportation))
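To see why the two conditional corrections recover the input state, the kernel above can be checked with a small state-vector calculation in plain Python, without CUDA-Q or a QCI token. The sketch below assumes the textbook matrix definitions of `rx`, `ry`, `rz` and `h`, and uses its own bit ordering; the helpers `apply_gate` and `apply_cx` are written for this illustration and are not CUDA-Q functions. Instead of sampling, it enumerates all four measurement outcomes and computes the fidelity between the corrected qubit 2 and the prepared state.

```python
import math

# Qubit k corresponds to bit k of the basis-state index (qubit 0 is the
# least significant bit). This ordering is a choice made for this sketch.


def apply_gate(state, gate, q):
    """Apply a 2x2 matrix `gate` to qubit `q` of a state vector."""
    (a, b), (c, d) = gate
    out = list(state)
    for i in range(len(state)):
        if not (i >> q) & 1:
            j = i | (1 << q)
            out[i] = a * state[i] + b * state[j]
            out[j] = c * state[i] + d * state[j]
    return out


def apply_cx(state, control, target):
    """Apply a controlled-X gate by swapping the affected amplitudes."""
    out = list(state)
    for i in range(len(state)):
        if (i >> control) & 1 and not (i >> target) & 1:
            j = i | (1 << target)
            out[i], out[j] = state[j], state[i]
    return out


def rx(t):
    c, s = math.cos(t / 2), math.sin(t / 2)
    return [[c, -1j * s], [-1j * s, c]]


def ry(t):
    c, s = math.cos(t / 2), math.sin(t / 2)
    return [[c, -s], [s, c]]


def rz(t):
    c, s = math.cos(t / 2), math.sin(t / 2)
    return [[complex(c, -s), 0], [0, complex(c, s)]]


R = 1 / math.sqrt(2)
H = [[R, R], [R, -R]]
ROTATIONS = (rx(3.14), ry(2.71), rz(6.62))

# Reference single-qubit state prepared by the three rotations.
psi = [1 + 0j, 0j]
for gate in ROTATIONS:
    psi = apply_gate(psi, gate, 0)

# Three-qubit circuit up to (but not including) the measurements.
state = [0j] * 8
state[0] = 1 + 0j
for gate in ROTATIONS:
    state = apply_gate(state, gate, 0)
state = apply_gate(state, H, 1)
state = apply_cx(state, 1, 2)
state = apply_cx(state, 0, 1)
state = apply_gate(state, H, 0)

fidelities = {}
for m1 in (0, 1):
    for m2 in (0, 1):
        # Amplitudes of qubit 2 given outcomes m1 (qubit 0) and m2 (qubit 1).
        base = m1 | (m2 << 1)
        q2 = [state[base], state[base | 4]]
        norm = math.sqrt(abs(q2[0])**2 + abs(q2[1])**2)
        q2 = [amp / norm for amp in q2]
        if m1 == 1:  # corresponds to z(qubits[2])
            q2 = [q2[0], -q2[1]]
        if m2 == 1:  # corresponds to x(qubits[2])
            q2 = [q2[1], q2[0]]
        overlap = psi[0].conjugate() * q2[0] + psi[1].conjugate() * q2[1]
        fidelities[(m1, m2)] = abs(overlap)**2

print(fidelities)
```

Under these assumptions each of the four entries should be 1 up to floating-point rounding, which means the corrected qubit matches the input state up to a global phase regardless of the measurement outcome.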

// Compile with

// ```

// nvq++ teleport.cpp --target qci -o teleport.x

// ```

//

// Make sure to export or otherwise present your user token via the environment,

// e.g., using export:

// ```

// export QCI_AUTH_TOKEN="your token here"

// ```

//

// Then run against the Seeker or AquSim with:

// ```

// ./teleport.x

// ```

#include <cudaq.h>

#include <iostream>

struct teleportation {

auto operator()() __qpu__ {

// Initialize a three qubit quantum circuit

cudaq::qvector qubits(3);

// Random quantum state on qubit 0.

rx(3.14, qubits[0]);

ry(2.71, qubits[0]);

rz(6.62, qubits[0]);

// Create a maximally entangled state on qubits 1 and 2.

h(qubits[1]);

cx(qubits[1], qubits[2]);

cx(qubits[0], qubits[1]);

h(qubits[0]);

if (mz(qubits[0])) {

z(qubits[2]);

}

if (mz(qubits[1])) {

x(qubits[2]);

}

/// NOTE: If the return statement is changed to `mz(qubits)`, the program

/// fails. Ref: https://github.com/NVIDIA/cuda-quantum/issues/3708

return mz(qubits[2]);

}

};

int main() {

auto results = cudaq::run(20, teleportation{});

std::cout << "Measurement results of the teleported qubit:\n[ ";

for (auto r : results)

std::cout << r << " ";

std::cout << "]\n";

return 0;

}

Quantum Machines¶

The following code illustrates how to run kernels on Quantum Machines’ backends.

import cudaq

import math

# The default executor is mock; use the `executor` argument to run on another backend (real or simulator).

# Configure the address of the QOperator server in the `url` argument, and set the `api_key`.

cudaq.set_target("quantum_machines",

url="http://host.docker.internal:8080",

api_key="1234567890",

executor="mock")

qubit_count = 5

# Apply Hadamard gates to the first four qubits, followed by phase gates

# on qubits 0 and 1 (the last qubit is left untouched).

@cudaq.kernel

def all_h():

    qvector = cudaq.qvector(qubit_count)

    for i in range(qubit_count - 1):

        h(qvector[i])

    s(qvector[0])

    r1(math.pi / 2, qvector[1])

    mz(qvector)

# Submit synchronously

cudaq.sample(all_h).dump()
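As a reference for reading the counts returned by `cudaq.sample(all_h)`, the ideal, noise-free outcome set of this kernel can be listed in plain Python. The `s` and `r1` gates only change phases, so they do not affect measurement probabilities in the computational basis: qubits 0 to 3 are each uniformly random and qubit 4 stays in 0. The sketch assumes that qubit 0 is printed as the leftmost character of each bit string; results from the mock executor need not follow this ideal distribution.

```python
from itertools import product

qubit_count = 5

# Qubits 0..3 receive a Hadamard gate, so every 4-bit prefix is equally likely.
# The last qubit is never acted on and is always measured as 0.
ideal_outcomes = [
    "".join(bits) + "0" for bits in product("01", repeat=qubit_count - 1)
]
ideal_probability = 1 / len(ideal_outcomes)

print(len(ideal_outcomes), ideal_probability)
print(ideal_outcomes[:4])
```

Any bit string whose last character is 1 would, under these assumptions, indicate noise or a different bit ordering rather than an ideal outcome.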

// Compile and run with:

// ```

// nvq++ --target quantum_machines quantum_machines.cpp -o out.x && ./out.x

// ```

// This will submit the job to the Quantum Machines OPX available at the address

// provided by `--quantum-machines-url`. By default, the job runs on a mock

// executor. To execute on a real QPU, specify the executor name with

// `--quantum-machines-executor`.

// ```

// nvq++ --target quantum_machines --quantum-machines-url \

// "https://iqcc.qoperator.qm.co" \

// --quantum-machines-executor iqcc quantum_machines.cpp -o out.x

// ./out.x

// ```

// Assumes a valid set of credentials have been set prior to execution.

#include "math.h"

#include <cudaq.h>

#include <fstream>

// Define a simple quantum kernel to execute on Quantum Machines OPX.

struct all_h {

// Hadamard gates on the first four qubits, then phase gates on qubits 0 and 1.

auto operator()() __qpu__ {

cudaq::qvector q(5);

for (int i = 0; i < 4; i++) {

h(q[i]);

}

s(q[0]);

r1(M_PI / 2, q[1]);

mz(q);

}

};

int main() {

// Submit asynchronously (i.e., continue executing code in the file until

// the job has been returned).

auto future = cudaq::sample_async(all_h{});

// ... classical code to execute in the meantime ...

// Get the results from the future.

auto async_counts = future.get();

async_counts.dump();

// OR: Submit synchronously (i.e., wait for the job

// result to be returned before proceeding).

auto counts = cudaq::sample(all_h{});

counts.dump();

}

QuEra Computing¶

The following code illustrates how to run kernels on QuEra’s backends.

import cudaq

from cudaq.operators import RydbergHamiltonian, ScalarOperator

from cudaq.dynamics import Schedule

import numpy as np

## NOTE: QuEra Aquila system is available via Amazon Braket.

# Credentials must be set before running this program.

# Amazon Braket costs apply.

# This example illustrates how to use QuEra's Aquila device on Braket with CUDA-Q.

# It is a CUDA-Q implementation of the getting started materials for Braket available here:

# https://docs.aws.amazon.com/braket/latest/developerguide/braket-get-started-hello-ahs.html

cudaq.set_target("quera")

# Define the 2-dimensional atom arrangement

a = 5.7e-6

register = []

register.append(tuple(np.array([0.5, 0.5 + 1 / np.sqrt(2)]) * a))

register.append(tuple(np.array([0.5 + 1 / np.sqrt(2), 0.5]) * a))

register.append(tuple(np.array([0.5 + 1 / np.sqrt(2), -0.5]) * a))

register.append(tuple(np.array([0.5, -0.5 - 1 / np.sqrt(2)]) * a))

register.append(tuple(np.array([-0.5, -0.5 - 1 / np.sqrt(2)]) * a))

register.append(tuple(np.array([-0.5 - 1 / np.sqrt(2), -0.5]) * a))

register.append(tuple(np.array([-0.5 - 1 / np.sqrt(2), 0.5]) * a))

register.append(tuple(np.array([-0.5, 0.5 + 1 / np.sqrt(2)]) * a))

time_max = 4e-6 # seconds

time_ramp = 1e-7 # seconds

omega_max = 6300000.0 # rad / sec

delta_start = -5 * omega_max

delta_end = 5 * omega_max

# Times for the piece-wise linear waveforms

steps = [0.0, time_ramp, time_max - time_ramp, time_max]

schedule = Schedule(steps, ["t"])

# Rabi frequencies at each step

omega = ScalarOperator(lambda t: omega_max

if time_ramp < t.real < time_max else 0.0)

# Global phase at each step

phi = ScalarOperator.const(0.0)

# Global detuning at each step

delta = ScalarOperator(lambda t: delta_end

if time_ramp < t.real < time_max else delta_start)

async_result = cudaq.evolve_async(RydbergHamiltonian(atom_sites=register,

amplitude=omega,

phase=phi,

delta_global=delta),

schedule=schedule,

shots_count=10).get()

async_result.dump()

## Sample result

# ```

# {

# __global__ : { 12121222:1 21202221:1 21212121:2 21212122:1 21221212:1 21221221:2 22121221:1 22221221:1 }

# post_sequence : { 01010111:1 10101010:2 10101011:1 10101110:1 10110101:1 10110110:2 11010110:1 11110110:1 }

# pre_sequence : { 11101111:1 11111111:9 }

# }

# ```

## Interpreting result

# `pre_sequence` has the measurement bits, one for each atomic site, before the

# quantum evolution is run. The count is aggregated across shots. The value is

# 0 if site is empty, 1 if site is filled.

# `post_sequence` has the measurement bits, one for each atomic site, at the

# end of the quantum evolution. The count is aggregated across shots. The value

# is 0 if atom is in Rydberg state or site is empty, 1 if atom is in ground

# state.

# `__global__` has the aggregate of the state counts from all the successful

# shots. The value is 0 if site is empty, 1 if atom is in Rydberg state (up

# state spin) and 2 if atom is in ground state (down state spin).

// Compile and run with:

// ```

// nvq++ --target quera quera_basic.cpp -o out.x

// ./out.x

// ```

// Assumes a valid set of credentials have been stored.

#include "cudaq/algorithms/evolve.h"

#include "cudaq/algorithms/integrator.h"

#include "cudaq/schedule.h"

#include <cmath>

#include <complex>

#include <string>

#include <unordered_map>

#include <utility>

#include <vector>

// NOTE: QuEra Aquila system is available via Amazon Braket.

// Credentials must be set before running this program.

// Amazon Braket costs apply.

// This example illustrates how to use QuEra's Aquila device on Braket with

// CUDA-Q. It is a CUDA-Q implementation of the getting started materials for

// Braket available here:

// https://docs.aws.amazon.com/braket/latest/developerguide/braket-get-started-hello-ahs.html

int main() {

// Topology initialization

const double a = 5.7e-6;

std::vector<std::pair<double, double>> register_sites;

auto make_coord = [a](double x, double y) {

return std::make_pair(x * a, y * a);

};

register_sites.push_back(make_coord(0.5, 0.5 + 1.0 / std::sqrt(2)));

register_sites.push_back(make_coord(0.5 + 1.0 / std::sqrt(2), 0.5));

register_sites.push_back(make_coord(0.5 + 1.0 / std::sqrt(2), -0.5));

register_sites.push_back(make_coord(0.5, -0.5 - 1.0 / std::sqrt(2)));

register_sites.push_back(make_coord(-0.5, -0.5 - 1.0 / std::sqrt(2)));

register_sites.push_back(make_coord(-0.5 - 1.0 / std::sqrt(2), -0.5));

register_sites.push_back(make_coord(-0.5 - 1.0 / std::sqrt(2), 0.5));

register_sites.push_back(make_coord(-0.5, 0.5 + 1.0 / std::sqrt(2)));

// Simulation Timing

const double time_max = 4e-6; // seconds

const double time_ramp = 1e-7; // seconds

const double omega_max = 6.3e6; // rad/sec

const double delta_start = -5 * omega_max;

const double delta_end = 5 * omega_max;

std::vector<std::complex<double>> steps = {0.0, time_ramp,

time_max - time_ramp, time_max};

cudaq::schedule schedule(steps, {"t"}, {});

// Basic Rydberg Hamiltonian

auto omega = cudaq::scalar_operator(

[time_ramp, time_max,

omega_max](const std::unordered_map<std::string, std::complex<double>>

&parameters) {

double t = std::real(parameters.at("t"));

return std::complex<double>(

(t > time_ramp && t < time_max) ? omega_max : 0.0, 0.0);

});

auto phi = cudaq::scalar_operator(0.0);

auto delta = cudaq::scalar_operator(

[time_ramp, time_max, delta_start,

delta_end](const std::unordered_map<std::string, std::complex<double>>

&parameters) {

double t = std::real(parameters.at("t"));

return std::complex<double>(

(t > time_ramp && t < time_max) ? delta_end : delta_start, 0.0);

});

auto hamiltonian =

cudaq::rydberg_hamiltonian(register_sites, omega, phi, delta);

// Evolve the system

auto result = cudaq::evolve_async(hamiltonian, schedule, 10).get();

result.sampling_result->dump();

return 0;

}